November 10, 2024

I don’t know about you, but I read quite a lot of “random” papers. I get curious about Machine Learning (ML) topics I don’t know. I’ve long since learned the only remedy is to indulge my curiosity. While I read, I ask myself: how can this help my research?

Reading gives me ideas and fresh perspectives on my research, even if no tangible methodological ideas come from it. This inspired a new type of blog I call “Reading group.” In these blogs, I’ll connect ML topics I learned about to ML for Earth Observation (ML4EO) and share interesting papers. Today, I want to write about data-centric ML:

Data-centric ML is a subfield that studies how to create better datasets or features to train better machine learning models.

Seedat et al. [1] define it as follows:

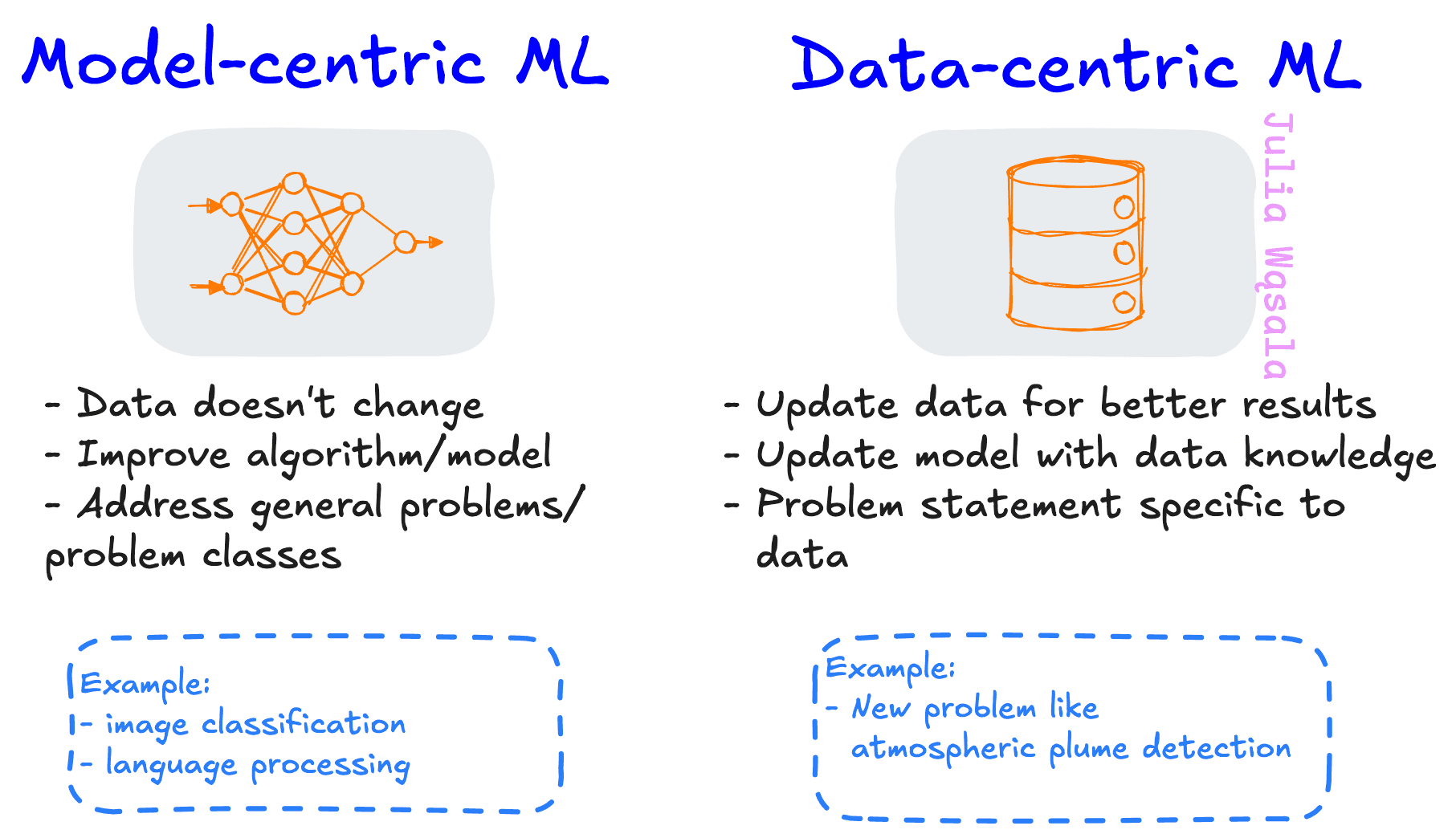

“Data-centric AI views model or algorithmic refinement as less important (and in certain settings, algorithmic development is even considered as a solved problem), and instead seeks to systematically improve the data used by ML systems. Conversely, in model-centric AI, the data is considered an asset adjacent to the model and is often fixed or static (e.g. benchmarks), whilst improvements are sought specifically to the model or algorithm itself.”

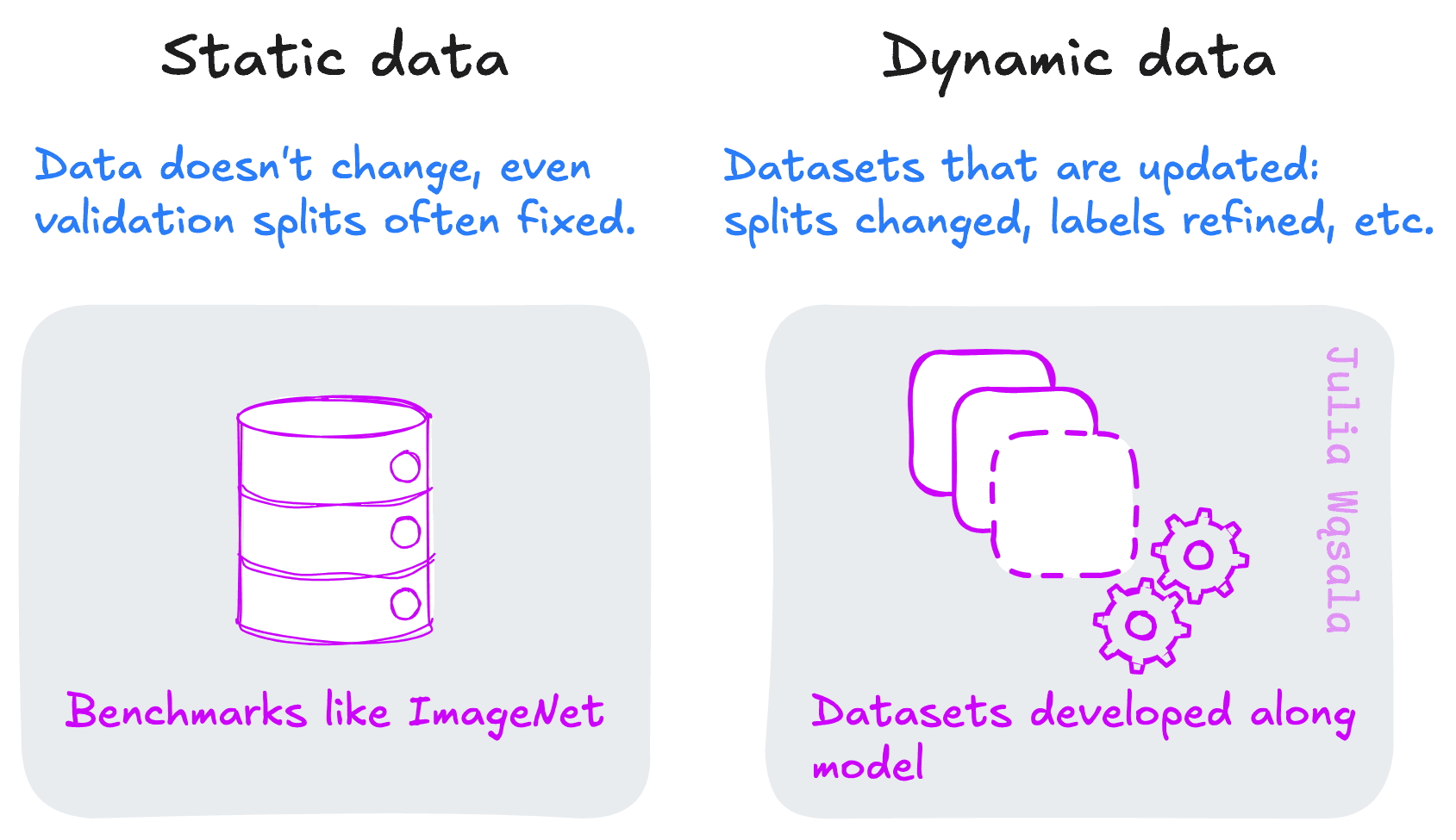

In other words, data-centric ML does not assume data is static. Compare this to “normal” ML, which uses benchmarks like MNIST. The whole point of benchmarks is that they’re static, so we can use them as tools of fair comparison.

According to Oala et al. [2], “normal” ML “oscillate[s] between two dominant phases: (i) design algorithm and throw data at it, (ii) go back to data (and its intermediate representations) to design better algorithms.” Normal ML defines abstract problem statements representing a class of problems. Think about how broad “image classification” is. We then validate our methods on a dataset and look for points of improvement. Data-centric machine learning focuses more on the second stage. It uses concrete datasets rather than abstract problem classes to inform modelling decisions.

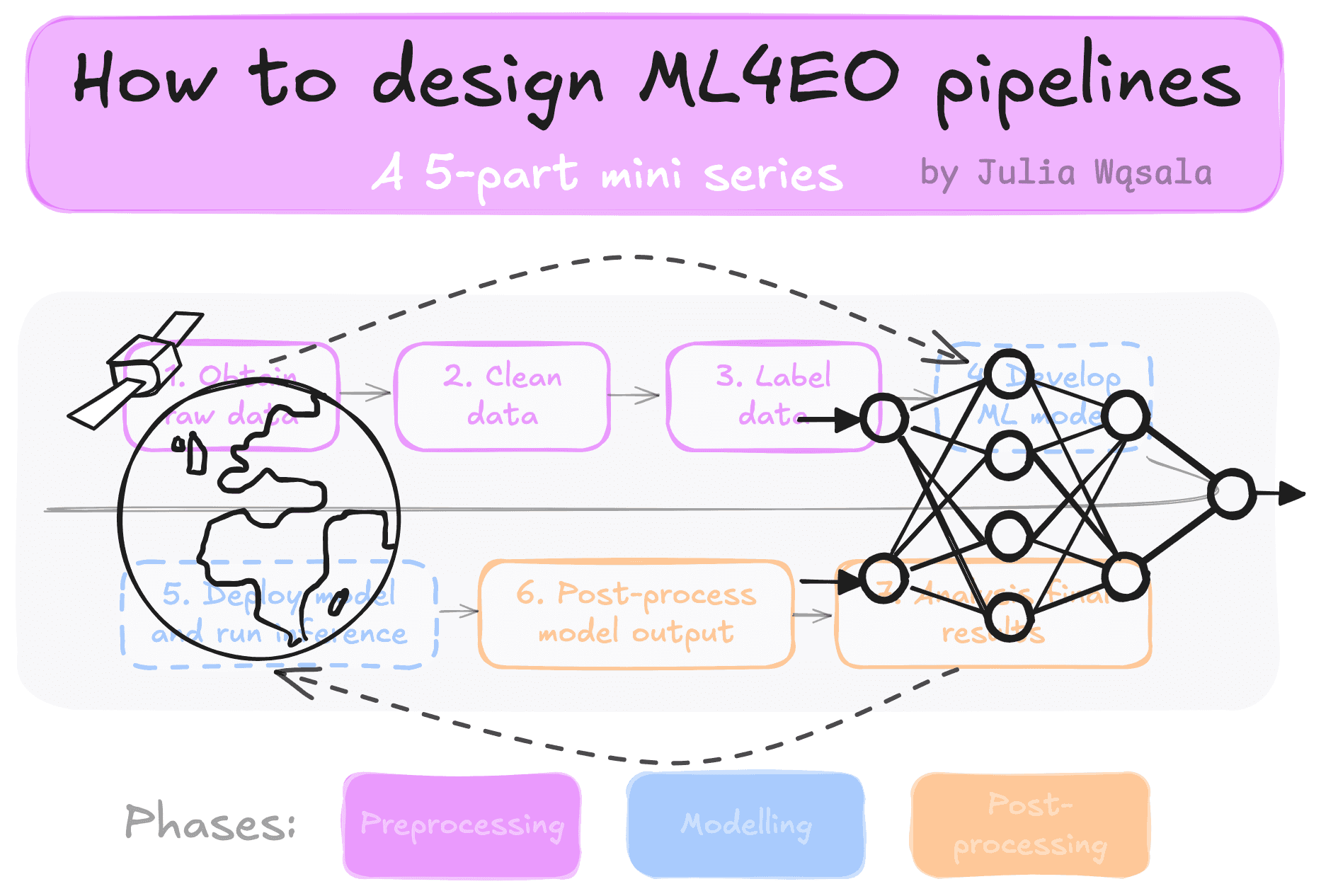

Many ML techniques, like attention modules, are Lego blocks you can plug into your pipeline. On the other hand, data-centric ML encompasses the whole ML pipeline. Data processing, training models, evaluating, deploying, and testing are all part of it. It’s more of an approach to designing ML problems and pipelines than a specific technique. The lifetime of an ML problem is roughly:

The maturity of your problem is basically where you are on the list. Image classification, for example, is very mature. Many datasets and models build on the limitations of previous ones. But before you can get to that iteration stage, you need to find the key problems that must be addressed to solve the task. For instance, convolutional neural networks had to be invented before image classification boomed. Convolutions exploit the fact that the same small features recur in different parts of images of the same class. For example, most faces have a nose, but its position depends on the framing and on the person's face. You need to get to know your data to find insights like that.
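To make the convolution point a bit more concrete, here is a minimal NumPy sketch. It assumes a toy 3x3 "nose" pattern and a hand-written sliding-window filter; the names `pattern`, `image_with_pattern`, and `strongest_response` are made up for illustration. It only shows that the same filter finds the same feature wherever it sits in the image, which is the property convolutions build on.

```python
import numpy as np

def cross_correlate2d(image, kernel):
    """Valid-mode 2D cross-correlation (the operation deep learning calls a convolution)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Slide the same small filter over every position of the image.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Hypothetical 3x3 "nose" pattern, used both as the feature and as the filter.
pattern = np.array([[0.0, 1.0, 0.0],
                    [1.0, 1.0, 1.0],
                    [0.0, 1.0, 0.0]])

def image_with_pattern(top, left, size=8):
    """Empty toy image with the pattern pasted at (top, left)."""
    image = np.zeros((size, size))
    image[top:top + 3, left:left + 3] = pattern
    return image

def strongest_response(image):
    """Location (row, col) where the filter responds most strongly."""
    response = cross_correlate2d(image, pattern)
    row, col = np.unravel_index(np.argmax(response), response.shape)
    return int(row), int(col)

# Same filter, two different framings: the response moves with the feature.
loc_a = strongest_response(image_with_pattern(1, 1))
loc_b = strongest_response(image_with_pattern(4, 3))
print(loc_a, loc_b)
```

The filter weights never change between the two images. Only the location of the strongest response does, and it follows the pattern.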

You could say the ML problem lifetime can be divided into two stages:

While you can use data-centric ML in both stages, I think it shines in the first. It’s difficult, if not impossible, to define effective problem statements based on abstract ideas of a problem. Nor will you get your dataset right on the first try. You’ll need to learn the most important problem to address (e.g., is it rotation or translation invariance?). Then, craft your dataset to reflect that.

If you never fix your dataset, it’ll be difficult for others to contribute to the same problem. That’s why I think standard ML practices propel us forward in the second stage. By considering the dataset as static, we can make real progress toward the best model for that particular problem. Once we discover a problem with this approach, we can always create a new dataset.

This is my theory: data-centric and model-centric ML are complementary views. To solve ML problems, we need stages of dynamic and fixed datasets. We have somewhat lost the data-centric perspective because of big wins in model-centric fields. Model-based breakthroughs in fields like classification and language processing attract people. But every successful ML field was once at the stage before its convolution or its transformer. Data-centric ML can help us get past that point.

One of the key principles is that data insights can be incorporated at each design step.

These insights are not just changes to the methods but also updates to the data. Standard ML papers use static benchmarks. As a result, you won’t learn anything about data cleaning and post-processing. But these are all steps you’ll have to go through when you can’t use benchmarks, as I wrote about in my blogs about designing ML4EO pipelines.

I wrote that most blogs and resources are like: “Here’s a cool dataset; here’s a cool architecture.” They didn’t teach me what I needed. Reading about data-centric ML gave me the words to describe it. Many blogs follow the standard model-centric perspective. As a result, they assume your problem is already defined, and your data is static. But I’ve never worked on an ML4EO project with static data.

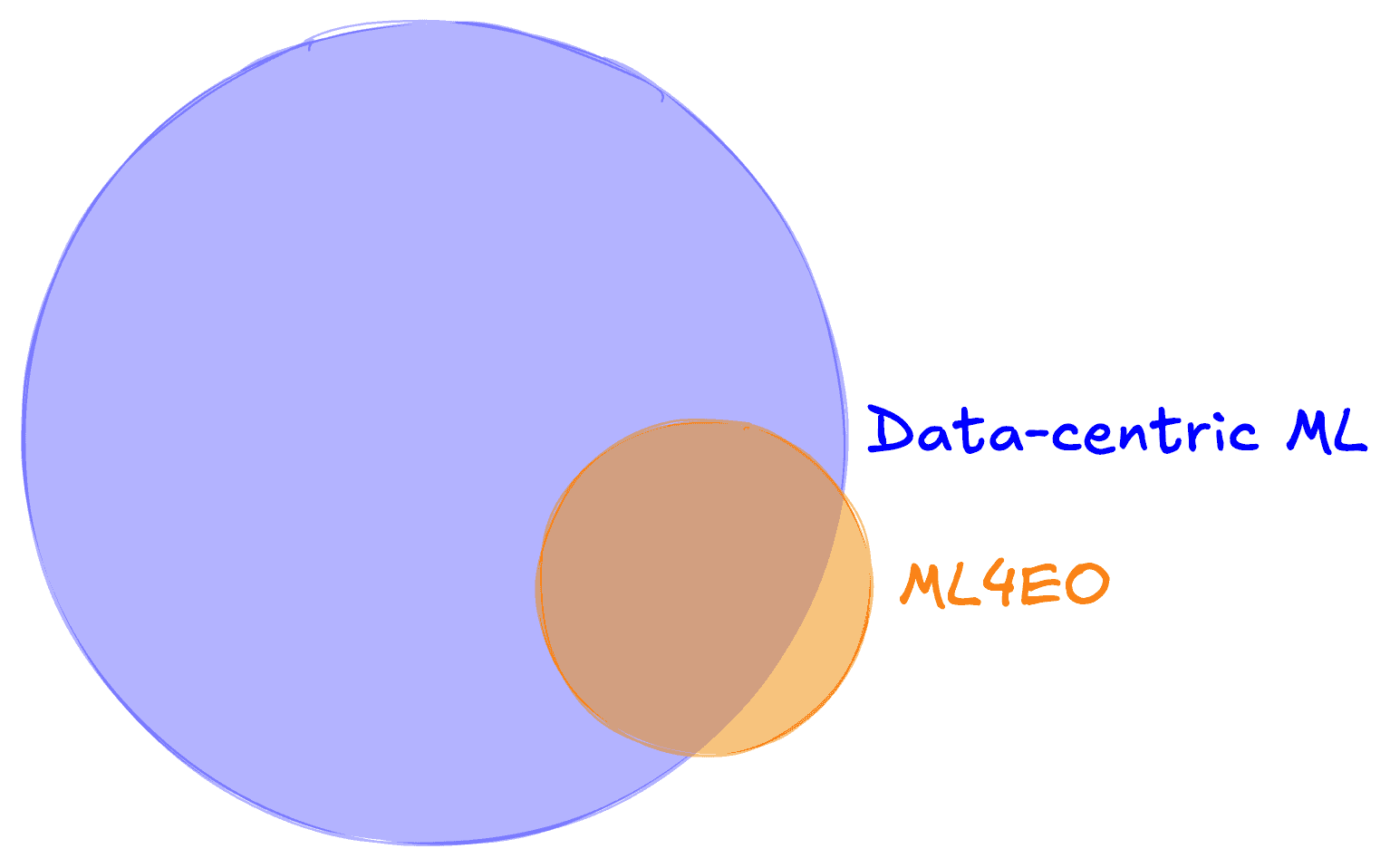

And given how young the ML4EO field is, does that make most ML4EO data-centric?

And if that’s the case, why should we care to reframe ML4EO as data-centric ML?

I’ve been trying to make sense of the differences between normal ML and ML4EO. The most concrete description I could come up with is that ML4EO, often proposed by EO authors, is much more focused on the data than the model. The data focus manifests in a few ways:

These ideas were living in my head rent-free when I read “DC-check: A data-centric AI checklist to guide the development of reliable machine learning systems” [1]. This paper introduces a very simple but interesting checklist to guide data-centric ML pipeline development. It’s a list of questions to help you integrate knowledge about the data in your design. They organise the questions in 4 categories: data pre-processing, training, deploying and testing the model.

And whaddayaknow, what do I see in the list? Think about non-standard data splits and go beyond summary statistics in the evaluation. The latter point reminded me of EO use case applications right away, even though this is a general paper. In other words, many of these ML4EO-specific ideas I was trying to define fit quite well within the data-centric ML framework.
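As a rough illustration of those two checklist items, here is a minimal NumPy sketch. It is my own toy example, not code from the DC-Check paper. It assumes a spatial block split as the "non-standard" split and per-block accuracy as the step beyond a single summary statistic. The data is synthetic, and names like `spatial_block_ids` and `block_split` are made up.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for an EO dataset: one (x, y) location per sample, in km.
n_samples = 1000
coords = rng.uniform(0, 100, size=(n_samples, 2))
labels = rng.integers(0, 2, size=n_samples)

def spatial_block_ids(coords, block_size):
    """Assign each sample to a square grid block based on its location."""
    cells = np.floor(coords / block_size).astype(int)
    n_cols = cells[:, 0].max() + 1
    return cells[:, 1] * n_cols + cells[:, 0]

def block_split(block_ids, test_fraction, rng):
    """Hold out whole blocks, so no test sample shares a block with a training sample."""
    unique_blocks = np.unique(block_ids)
    n_test = max(1, int(round(test_fraction * len(unique_blocks))))
    test_blocks = rng.choice(unique_blocks, size=n_test, replace=False)
    test_mask = np.isin(block_ids, test_blocks)
    return ~test_mask, test_mask

def accuracy_per_group(y_true, y_pred, groups):
    """Accuracy per group, to look beyond one overall number."""
    return {
        int(g): float(np.mean(y_true[groups == g] == y_pred[groups == g]))
        for g in np.unique(groups)
    }

block_ids = spatial_block_ids(coords, block_size=25.0)
train_mask, test_mask = block_split(block_ids, test_fraction=0.25, rng=rng)

# Placeholder predictions; a real pipeline would use a model fitted on the training samples.
predictions = rng.integers(0, 2, size=n_samples)
per_block = accuracy_per_group(
    labels[test_mask], predictions[test_mask], block_ids[test_mask]
)
print(per_block)
```

A random split would scatter neighbouring samples across train and test. Holding out whole blocks is one way to test on locations the model has not seen, and the per-block numbers show where it struggles instead of hiding that in an average.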

So, does that make all of ML4EO data-centric? Maybe not. But I think it’s, at the very least, a very useful framework. Call me a stuck-up computer scientist, but I like well-defined frameworks and terms. They make it easier to motivate and explain ideas. Once we start redefining ML4EO problems as data-centric, we can:

There are probably a lot of frustrated EO scientists out there who wonder why we never talk about the data. Data-centric ML can be the bridge that brings us closer.

A simple place to start building data-centric ML4EO pipelines is with checklists, like DC-Check or the questions for assessing data quality criteria proposed by Roscher et al. [3]. We already know the answers to questions like “*Is my data complete?*” but we never write them down. In my experience, the simple act of writing it down helps to:

Finally, a data-centric approach can help us PhD students struggling to publish in ML venues. The model-centric focus pushes for general contributions applicable to broad classes of problems. But such general methods often don't work out of the box on challenging ML4EO problems. Reframing our work as data-centric can prevent it from being written off as a simple application.

(I was very tempted to title this “do your own research”)

DC-Check [1] proposes a set of questions to guide researchers in data-centric ML. This paper provides a practical explanation of the advantages of data-centric ML and how to use it. It provides handholds for the whole design process, not just problem statement crafting.

[1] Seedat, N., Imrie, F., & van der Schaar, M. (2022). DC-check: A data-centric AI checklist to guide the development of reliable machine learning systems. arXiv preprint arXiv:2211.05764.

Oala et al. [2] is an editorial on the evolution of data-centric ML research, sharing insights from a workshop. It’s a good jumping-off point with links/citations to useful resources.

[2] Oala, L., Maskey, M., Bat-Leah, L., Parrish, A., Gürel, N. M., Kuo, T. S., ... & Mattson, P. (2024). DMLR: Data-centric Machine Learning Research - Past, Present and Future. Journal of Data-centric Machine Learning Research, (4):1−18.

Roscher et al.'s paper on data-centric ML for EO proposes a set of questions to assess the quality of an EO dataset [3]. This differs from Seedat et al.'s because it doesn't differentiate between ML pipeline stages and focuses on the data. This checklist highlights some of the practical issues you have with real-world data.

[3] Roscher, R., Rußwurm, M., Gevaert, C., Kampffmeyer, M., Dos Santos, J. A., Vakalopoulou, M., ... & Tuia, D. (2024). Better, not just more: Data-centric machine learning for Earth observation. IEEE Geoscience and Remote Sensing Magazine.