August 20, 2024

The experimental details section is one of the first parts of a Machine Learning for Earth Observation (ML4EO) paper I read. This honest section tells me whether the authors' experiments can prove their claims. Experimental design determines which questions our experiments can answer.

Data descriptions sometimes get their own section. But don’t be fooled: how you prepare the data for an ML project is an important part of your experimental design. How you choose images, label them, and split the data all change the interpretation of your results. ML papers don't teach much about preparing data because ML researchers often use benchmark datasets.

Benchmark datasets are large datasets that represent computer science problems. For example, the CIFAR-10 dataset has 60,000 images of animals and vehicles. It’s a general-purpose dataset for evaluating image classification networks. Benchmarks like CIFAR-10 often have pre-defined test splits. Everybody uses this same test data, making comparing your solution with others easier.

In ML4EO, we often have to make our own datasets. Some datasets, like EuroSAT, do not pre-partition their data. You have to create training and test splits yourself. So how do you do that? And how do you take the characteristics of ML4EO data, like the geographical coordinates of images, into account? I wrote a blog about making ML4EO datasets a few weeks ago. In this blog, I go more in-depth on defining splits for ML4EO datasets.

We’ll talk about:

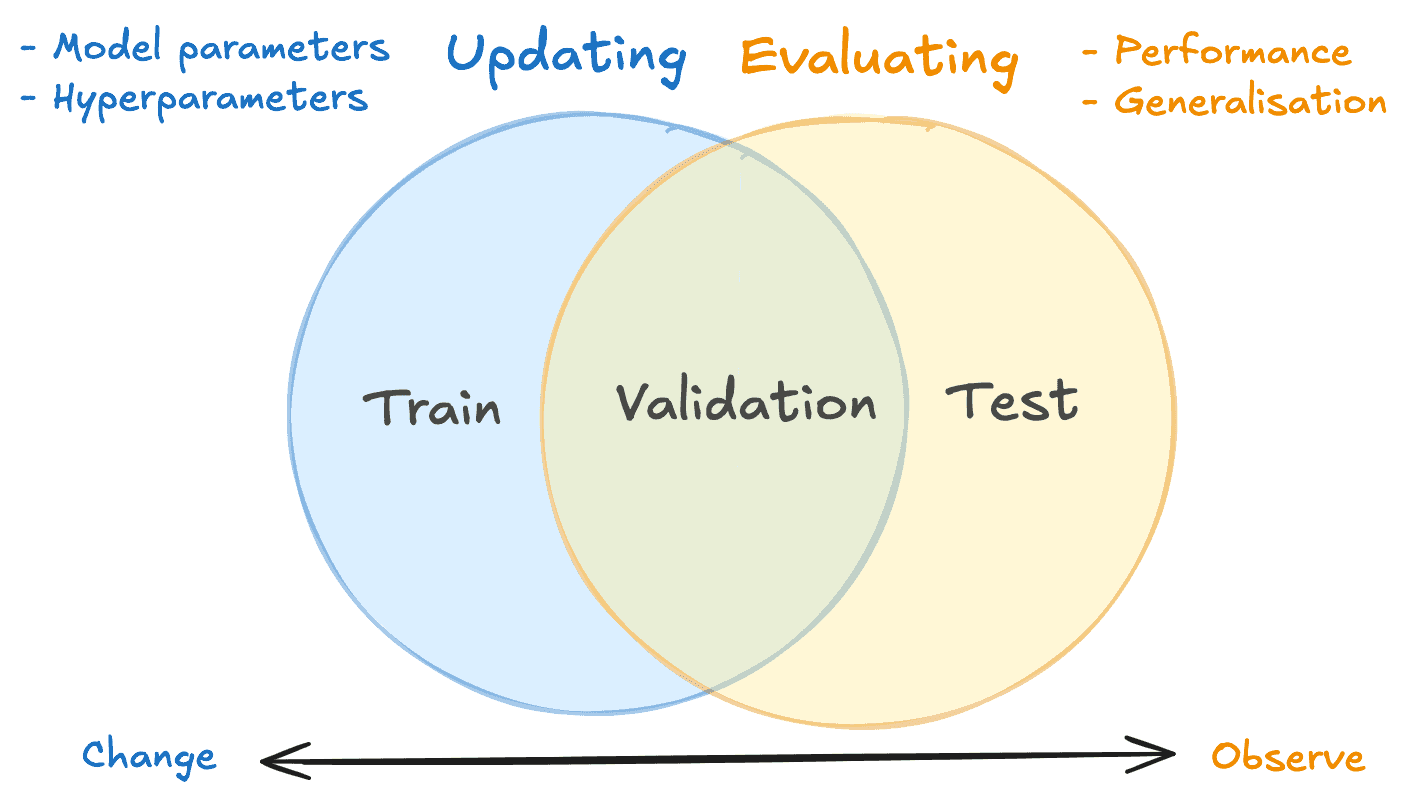

We split machine learning datasets into partitions with different functions. The three standard splits are the training, validation, and test sets.

Some partitions are for changing your design -- updating parameters. Some are for observing and evaluating your setup.

The training split updates the model's weights during training. So, training data is for updating only, not for evaluation.

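We use the validation data both for updating and evaluating: we evaluate the model on it during development, and we use those results to update our model design.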

Then, we have the test set, which is not touched during training. With the test set, we estimate our model's performance “in the wild” compared to other models. We never use testing data for updating! This is why it's even better if you don't look at it during development. It's too likely you'll accidentally change your model based on what you see in the test results.

We can’t use training data for evaluation because this data shaped the model. The training data is the model’s homework, while the test data is the final exam. You know how it feels to get a homework question on the exam. It’s a bonus: you can get it right by memorising all your homework instead of learning the theory. In the same way, ML test sets must be separate from the training data. How we define these splits changes what conclusions we can draw from the results.

Defining data splits is not much more than choosing a data sampling strategy. You need a set of rules for sorting data into the training, validation, or testing partition. The beauty of ML4EO data is the metadata we can use to create these rules, like the timestamp and location. We will look at an example of splitting the data by geographic location.

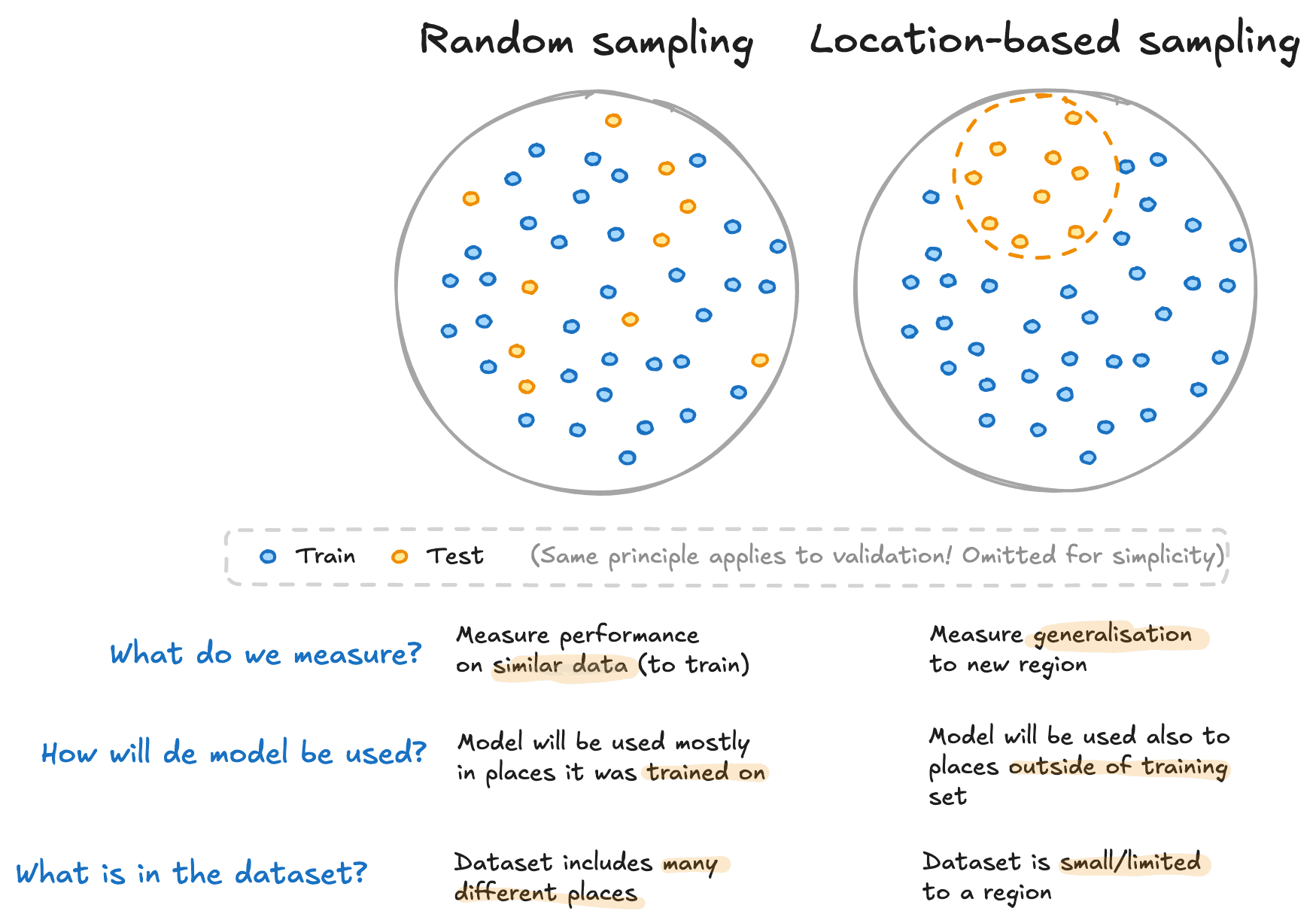

Say we have a dataset of satellite images from all over the world and we need to split it into training, validation, and test sets. Before choosing a sampling strategy, we need to ask ourselves three questions:

Now, let’s look at two sampling strategies and how they change the meaning of our results:

Random sampling is the most common sampling strategy for creating data splits. It does exactly what it says on the box: choose instances uniformly at random. Random sampling makes our training split more representative of the test set. We're more likely to get images from every part of the world in each data partition if we select random points. As a result, the test results measure the model's performance on similar data. This is useful if your dataset covers most of the regions you’re interested in.
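Here is a minimal sketch of random sampling in plain Python. The function name `random_split`, the 70/15/15 fractions, and the made-up image IDs are my own assumptions for illustration, not a fixed recipe.

```python
import random


def random_split(items, fractions=(0.7, 0.15, 0.15), seed=42):
    """Shuffle items and cut them into train/validation/test lists."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    shuffled = list(items)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_train = round(fractions[0] * len(shuffled))
    n_val = round(fractions[1] * len(shuffled))
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # whatever is left over
    return train, val, test


# Hypothetical image IDs standing in for a real dataset.
image_ids = [f"img_{i:04d}" for i in range(1000)]
train_ids, val_ids, test_ids = random_split(image_ids)
```

Fixing the seed matters more than it seems: if the split changes between runs, your test results are no longer comparable.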

The first law of geography states that everything is related to everything else, but near things are more related than distant things. Think about how the landscape changes from region to region. Or how houses in the same neighbourhood often have the same style. With random sampling, the images in the training and test sets correlate more. The model recognises the test samples because they look like the training data. As a result, the test samples are easier to predict, and the model scores higher. However, this score doesn't reveal much about your model's performance in a region far from your training data.
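One rough way to see this effect in your own split is to measure how far each test image is from its nearest training image. The sketch below uses the haversine formula with a mean Earth radius of 6371 km. The helper names and the example coordinates are hypothetical, and the brute-force loop is only meant for small datasets.

```python
import math


def haversine_km(lon1, lat1, lon2, lat2):
    """Great-circle distance in kilometres between two lon/lat points."""
    radius_km = 6371.0  # mean Earth radius
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    # Clamp to 1.0 to guard against floating-point overshoot.
    return 2 * radius_km * math.asin(min(1.0, math.sqrt(a)))


def nearest_train_distance_km(test_points, train_points):
    """For each test point, the distance to its closest training point."""
    return [
        min(haversine_km(t_lon, t_lat, lon, lat) for lon, lat in train_points)
        for t_lon, t_lat in test_points
    ]


# Hypothetical (lon, lat) image centres.
train_points = [(4.9, 52.4), (13.4, 52.5)]
test_points = [(4.5, 51.9), (121.0, 14.6)]
distances = nearest_train_distance_km(test_points, train_points)
```

If most test images sit within a few kilometres of a training image, the test score mostly tells you how the model does on familiar ground.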

You can solve this problem by sampling your data based on the location instead of randomly. Draw polygons or circles on the map where the training, validation, and test sets will be. Now, you will be training your model on one region and evaluating it on another. You'll learn how your model generalises to places it hasn't seen before.
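A minimal sketch of location-based sampling, assuming each image comes with the longitude and latitude of its centre. I use rectangular bounding boxes as the simplest kind of polygon. The `REGIONS` boxes, the `assign_split` function, and the sample coordinates are all made up for illustration.

```python
# Hypothetical regions as (min_lon, min_lat, max_lon, max_lat) boxes.
REGIONS = {
    "train": [(-20.0, 35.0, 20.0, 60.0)],
    "val": [(20.0, 35.0, 40.0, 60.0)],
    "test": [(100.0, -10.0, 150.0, 30.0)],
}


def assign_split(lon, lat, regions=REGIONS):
    """Return the split whose box contains the point, or None."""
    for split, boxes in regions.items():
        for min_lon, min_lat, max_lon, max_lat in boxes:
            # Half-open intervals keep touching boxes from overlapping.
            if min_lon <= lon < max_lon and min_lat <= lat < max_lat:
                return split
    return None  # outside every region: leave the image out


# Hypothetical samples: (image_id, lon, lat).
samples = [
    ("img_a", 4.9, 52.4),
    ("img_b", 30.5, 50.4),
    ("img_c", 121.0, 14.6),
    ("img_d", -60.0, -3.0),
]
splits = {image_id: assign_split(lon, lat) for image_id, lon, lat in samples}
```

Images that fall outside every region are dropped here. You could also leave a buffer between regions on purpose, so that neighbouring images don't end up on both sides of a border.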

Location-based sampling is also a good idea if your dataset is very small or covers a very small region. This is because you’re at greater risk of overfitting to that specific region. Splitting your data by location will help you measure generalisation a little better than with random sampling. But still: expect a drop in performance when you test on very different data.

Choosing the right sampling strategy helps us get a more trustworthy evaluation. The gap in accuracy between random and location-based sampling can be very large. Traoré et al. (2021) find a difference of almost 30% in favour of random sampling. These types of results suggest the model does well on similar data but doesn't generalise as well to new places.

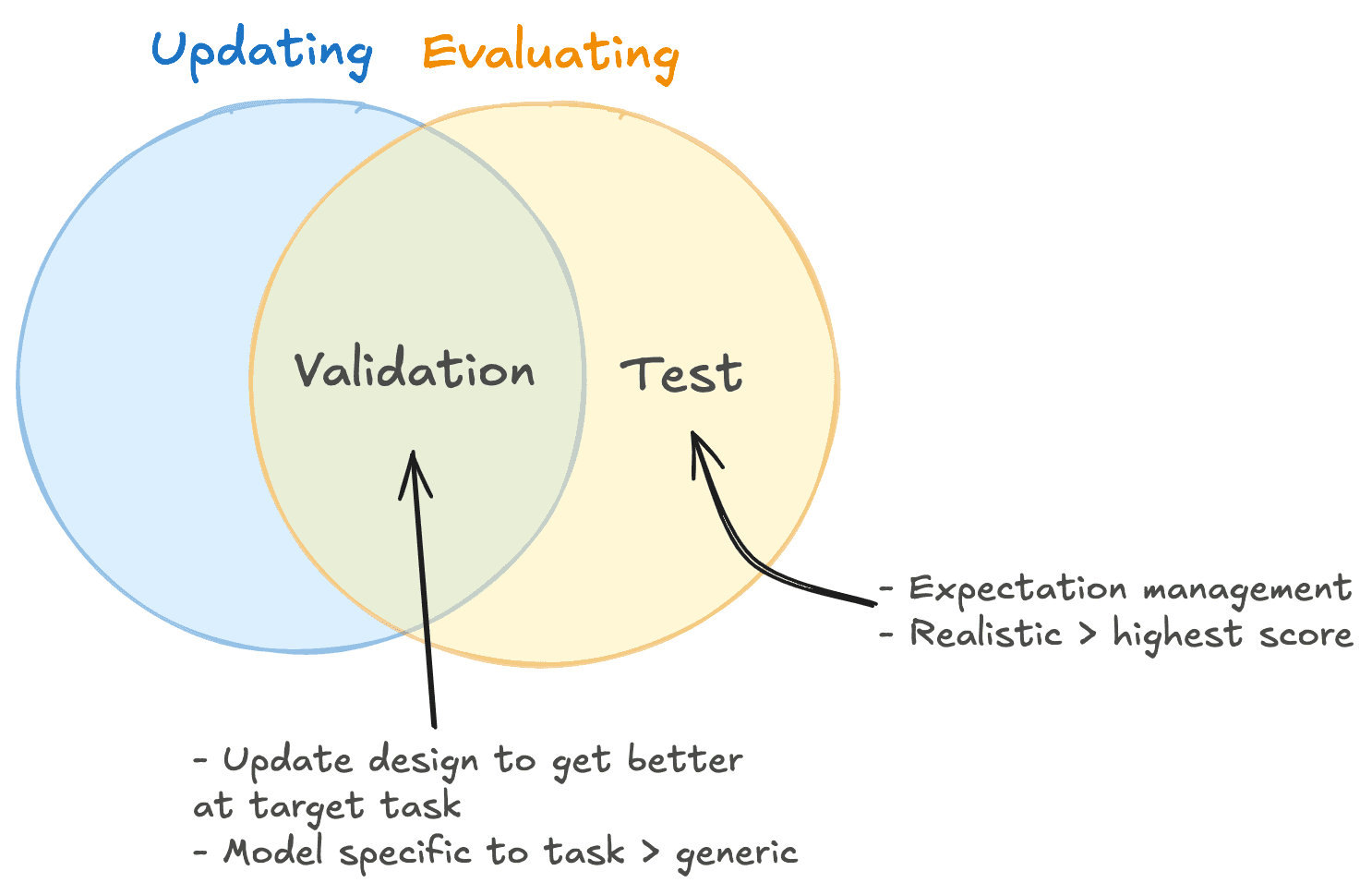

But there’s more to choosing the right sampling strategy than expectation management. It also helps with updating our model design. The magic happens when you use the same sampling strategy for all three splits. It’s all in the validation set we use to improve our model design. If the sampling strategy of the validation set aligns with our goal, we design our model for that specific goal instead of just chasing higher accuracy. The right sampling strategy means we’re more likely to get the right model.

Finally, you can do much more than split by geographic coordinates. Depending on your goal, you can choose any other ML4EO metadata as a criterion. You can use the timestamp when your problem is dynamic (think of atmospheric data), or combine multiple values if your data is complex. Don’t be afraid to find creative solutions using your domain knowledge.
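As an example of splitting on the timestamp, here is a small sketch that trains on the oldest images and tests on the newest. The function name `temporal_split`, the cut-off dates, and the samples are assumptions I made up for illustration.

```python
from datetime import date


def temporal_split(samples, val_start, test_start):
    """Sort (image_id, acquisition_date) pairs into splits by date."""
    if val_start >= test_start:
        raise ValueError("val_start must be earlier than test_start")
    splits = {"train": [], "val": [], "test": []}
    for image_id, acquired in samples:
        if acquired < val_start:
            splits["train"].append(image_id)
        elif acquired < test_start:
            splits["val"].append(image_id)
        else:
            splits["test"].append(image_id)
    return splits


# Hypothetical samples: (image_id, acquisition date).
samples = [
    ("img_a", date(2021, 6, 1)),
    ("img_b", date(2022, 3, 15)),
    ("img_c", date(2023, 1, 10)),
    ("img_d", date(2023, 8, 20)),
]
time_splits = temporal_split(
    samples, val_start=date(2022, 1, 1), test_start=date(2023, 1, 1)
)
```

With this split, the test score estimates how the model does on images taken after everything it was trained on.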