May 16, 2024

Unlike in many other countries, foreign movies in the Netherlands are not dubbed. We have Dutch subtitles instead. I learned that’s because there are not enough people speaking Dutch to earn back the investment of hiring voice actors. We have a similar problem with machine learning datasets for “niche” Earth Observation tasks, such as atmospheric plume detection. If you work with satellite data, I don’t have to tell you how popular machine learning has become. Even Google is bringing out models for satellite data.

To develop machine learning models, you need data in a specific format, as in datasets like BigEarthNet and Functional Map of the World. The truth is that for plenty of Earth Observation tasks, we don’t have many datasets. That’s because labelling is tricky, and unlike in cat-or-dog problems, there isn’t always a black-or-white answer. But what if you don’t want to do land cover classification or detect human-made structures? You can’t wait around for others to make a dataset. You need to make your own.

As I said, machine learning datasets need to be in a specific format. Bare minimum: we need images and labels of what’s in the images. A good dataset is the basis of a good model, but making a good dataset is not as simple as it sounds. You do not have to reinvent the wheel, though. Having a few machine-learning concepts in your toolbox goes a long way.

I will give you these tools so you can design your own ML4EO dataset. We will use them to build the three components we need for an ML dataset: images, labels, and a test set.

These machine learning concepts are helpful ways to think about dataset design choices. But just like we often don’t have ground-truth labels, there isn’t always a right answer.

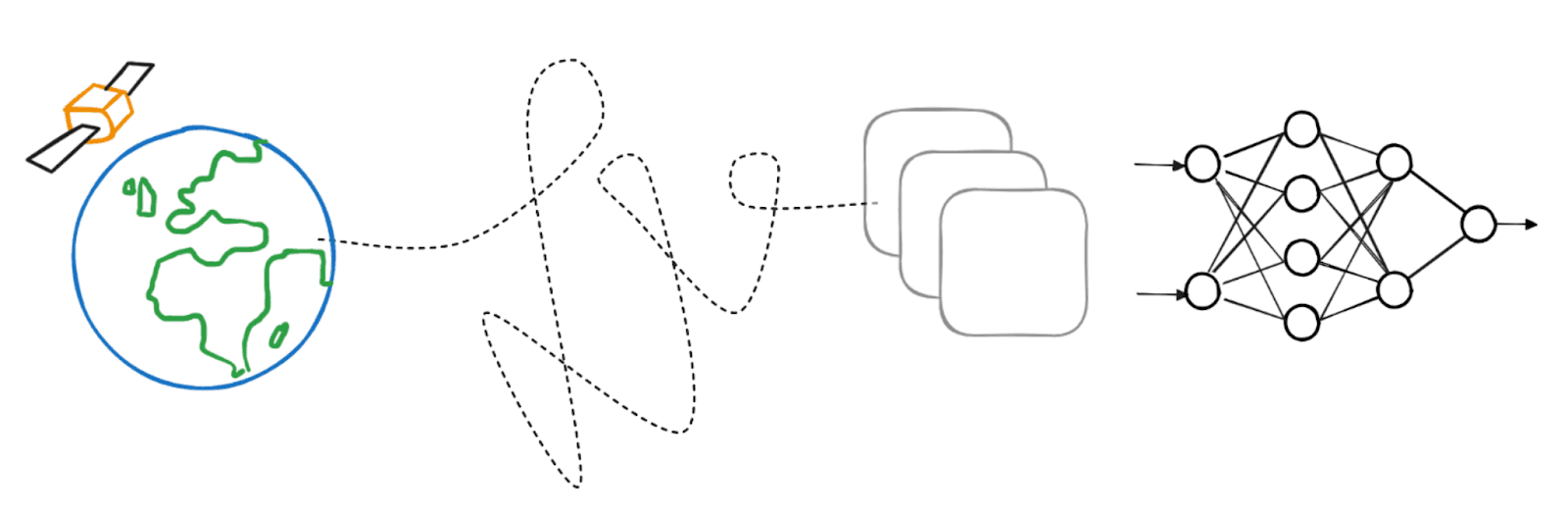

The first thing we need to do is find data that shows the concept we want to detect. Water wells, for instance. Sometimes, finding these images is harder than you think. A reasonable start is finding satellite images from places with water wells. But if you only know water wells in one village, you probably don’t have enough images. And what if the water wells in the next village look a bit different? Your model would not recognise them.

The problem is that neural networks are really bad at extrapolating. They only know what you show them. If you show water wells with a roof, the network will only recognise water wells with one.

So, we need to avoid our network having to extrapolate. The best way to do that is to make your dataset as representative as possible. That is tool number one in our belt. In our case, that means including water wells from more than one village and in more than one style, for example with and without a roof.

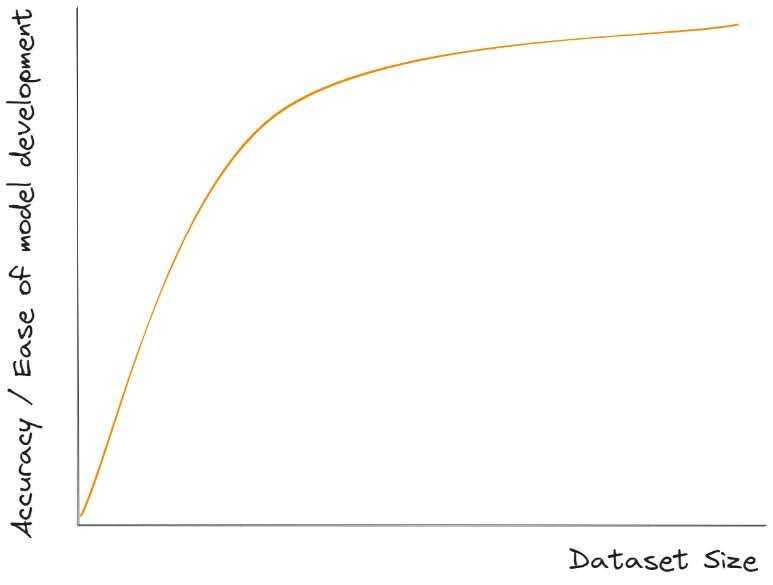

Now that we know which images we need, the big question is: How many do we need? How large should your dataset be? Because labelling is expensive, you’re looking for the smallest size you can get away with. The minimum you need to train a good model depends on how hard your task is, how good your labels are, and whether there is class imbalance.

Finding the minimum size is a process of trial and error. There isn't always a straight answer. Overfitting can be the fault of a small dataset but also of model choice and training.

Instead of minimising your dataset, make it as large as you can afford. The rule of thumb: the more, the better. More (high quality!) data will keep increasing the accuracy of your model, although with diminishing returns as it approaches a ceiling. More importantly, it will make your life easier. We need all kinds of tricks to make models work for small datasets. It’s much easier when you have enough data.

Of course, there is a caveat. The representativeness of your dataset is important, but it is not the only challenge that warrants respect. In the real world, classes are rarely balanced. However, many machine learning models assume that your data is balanced. That means they expect as many images with wells as without wells. If your dataset has many more images without wells than with wells, your neural network will learn that it pays off to classify images as “not well.” Yeah, not well, indeed!

This problem is called class imbalance. Strategies to combat it, like setting loss weights, exist, but they usually don’t completely solve the problem. So, when selecting images for your dataset, you need to find a balance between representativeness and class balance.
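To make the loss-weight idea concrete, here is a minimal sketch that counts the classes in a label list and derives one weight per class, inversely proportional to how often the class occurs. The label names and the 90/10 split are made up for illustration, and the formula is just one common heuristic, not the only way to set weights.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Return one weight per class, inversely proportional to its frequency."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    # Common "balanced" heuristic: n_samples / (n_classes * class_count).
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical labels: 90 images without a well, 10 images with one.
labels = ["no_well"] * 90 + ["well"] * 10

class_counts = Counter(labels)
weights = inverse_frequency_weights(labels)

print("class counts:", dict(class_counts))
print("loss weights:", weights)
```

The rare class gets the larger weight, so a mistake on a “well” image costs the model more than a mistake on a “no well” image. Most deep learning frameworks accept per-class weights like these in their classification losses.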

In supervised learning, an image is never found without its partner: the label. The label tells the model what you want it to “see” in the image. Polishing your labels is worth your time: crap data in is crap predictions out. If your labels are full of errors, the label quality is low, and the model predictions won’t be good either.

Labels are like lessons you teach the model. Models aren’t the brightest students. You have to explain what a well looks like by providing the right images labelled as “water well”. And if you make a few mistakes, the model will get confused about what a well is and return all kinds of things that aren’t water wells.

Label quality determines the performance of your model. So, do the best you can to weed out mistakes. When there is no ground truth, you can leave out images where you’re not sure of the class. That is another trade-off: dataset size vs. label quality. You should know, though, that errors are common. Even ImageNet has faulty labels. So don’t beat yourself up for a few mistakes.
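Leaving out uncertain images can be as simple as a filter over your annotations. The sketch below assumes a hypothetical annotation format in which the labeller sets an `unsure` flag; the file names and field names are invented for illustration.

```python
# Hypothetical annotation records: the "unsure" flag is set by the labeller
# whenever the class could not be decided with confidence.
annotations = [
    {"image": "tile_001.tif", "label": "well", "unsure": False},
    {"image": "tile_002.tif", "label": "well", "unsure": True},
    {"image": "tile_003.tif", "label": "no_well", "unsure": False},
    {"image": "tile_004.tif", "label": "no_well", "unsure": True},
]


def keep_confident(records):
    """Keep only the records the labeller was sure about."""
    return [record for record in records if not record["unsure"]]


clean = keep_confident(annotations)
dropped = len(annotations) - len(clean)

# Report how much data the quality filter costs us.
print(f"kept {len(clean)} of {len(annotations)} images, dropped {dropped}")
```

Printing how many images were dropped keeps the size vs. quality trade-off visible: if the filter removes most of your positives, it may be better to relabel than to discard.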

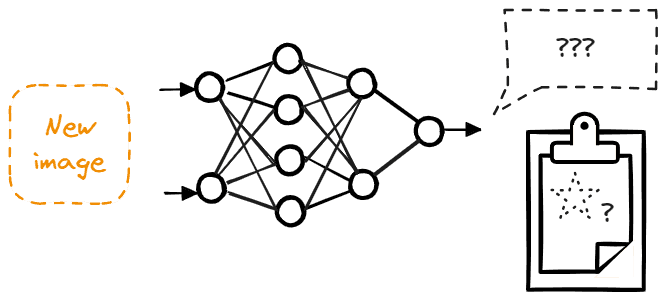

Now that we have a labelled dataset, the end goal comes back into view. We needed the dataset to train a model. The next step is to compare models so we can find the best one.

One strategy is to train your model using the entire dataset you made and then evaluate it on an area of interest. These predictions will give you detailed insight, but labelling and analysing them takes a long time. And every model you test will make slightly different predictions that you would need to label.

Thank god there is an easier way. Instead of training models on the whole dataset, you hold out some images. You then use your trained models to predict labels for this hold-out set. This set of images is called a clean test set, and it’s a proxy for using your model “in real life” with images it has never seen. It’s an easy way to approximate your model’s ability to generalise.

What if you test your model on images it has already seen? A neural network sees each image in the training set once per training epoch. So, a trained model is deeply familiar with each image. It’ll predict labels for these images more confidently and accurately than for unseen images. So, if you calculate test metrics based on images the model has already seen, you will be in for a nasty surprise once you use your model on unseen data. It will perform worse than you expected.
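A minimal sketch of holding out images, assuming each image has a unique ID. The function name, the 20% test fraction, and the tile names are my own choices for illustration; the important part is the check that no image ends up in both sets.

```python
import random


def split_holdout(image_ids, test_fraction=0.2, seed=42):
    """Shuffle the image IDs and hold out a fraction of them as a test set."""
    ids = sorted(image_ids)  # sort first so the split does not depend on input order
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    rng.shuffle(ids)
    n_test = max(1, round(len(ids) * test_fraction))
    return ids[n_test:], ids[:n_test]


# Hypothetical image IDs.
image_ids = [f"tile_{i:03d}.tif" for i in range(100)]

train_ids, test_ids = split_holdout(image_ids)

# The test set is only "clean" if the model never sees these images in training.
assert set(train_ids).isdisjoint(test_ids)

print(f"{len(train_ids)} training images, {len(test_ids)} held-out test images")
```

Make the split once, save it, and reuse it for every model you compare. Otherwise the models are scored on different images and the comparison is not fair.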

This is our final tool: create a clean test set for realistic evaluation.

We learned the three most important ML dataset components and got new tools to build them.

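To select images, we use the following ML tools: make the dataset as representative as possible, make it as large as you can afford, and keep an eye on class balance.

We create labels knowing that label quality determines model performance and that a few mistakes are common, even in well-known datasets.

Finally, we find the best model for our dataset by evaluating each candidate on a clean test set of held-out images.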

There is one more vital component to making ML4EO datasets that I didn’t mention. When you have a machine learning background, you learn many of these things in university and by reading many papers. We have a lot of ML tools in our belts, but we don’t know all the nuances of applications—and people working with satellite data do!

Therefore, we cannot solve EO problems on our own without ML-ready datasets. If there’s no ML model for your important application yet, please consider making one. Even better, find an ML engineer and make one together.