November 10, 2024

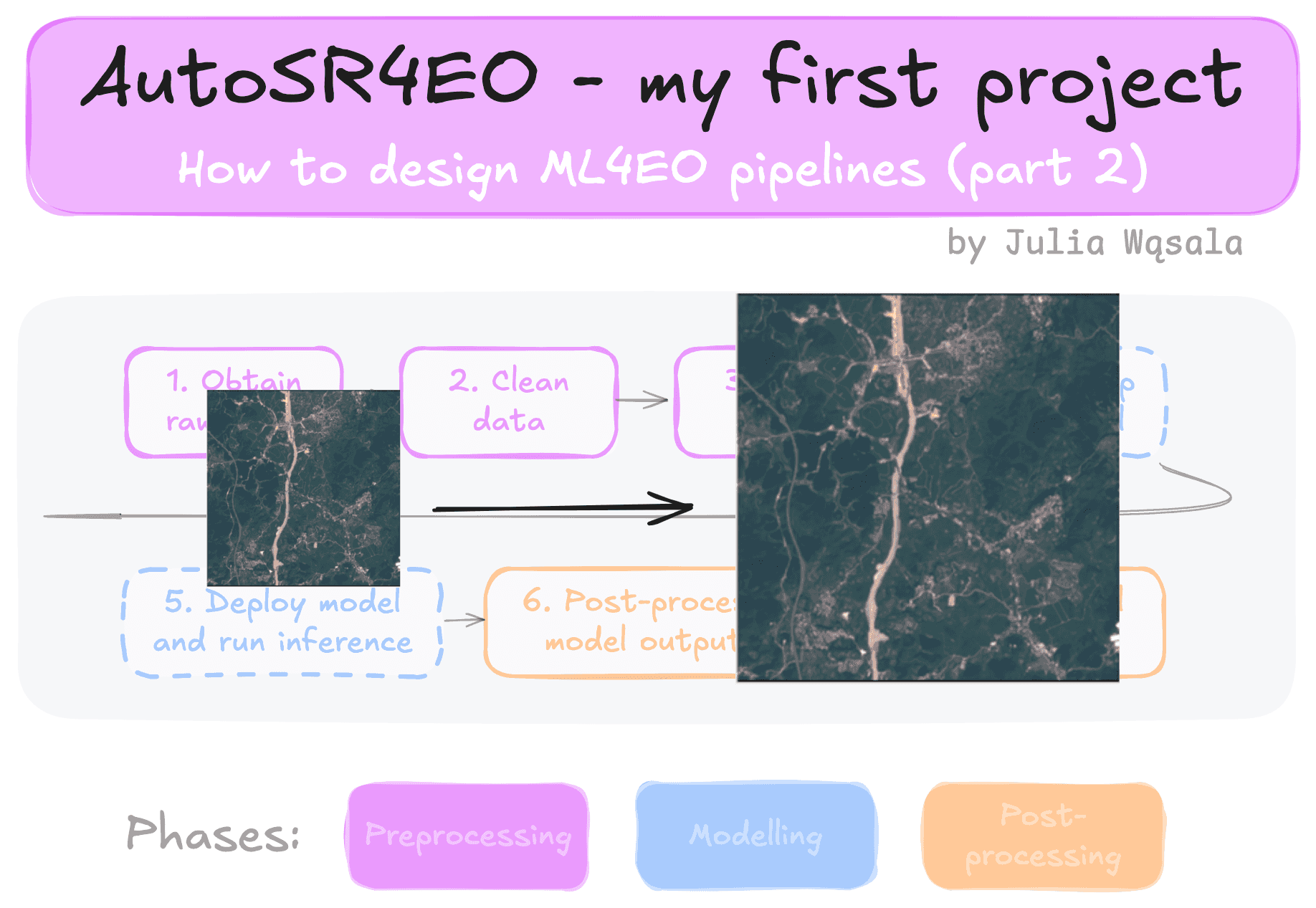

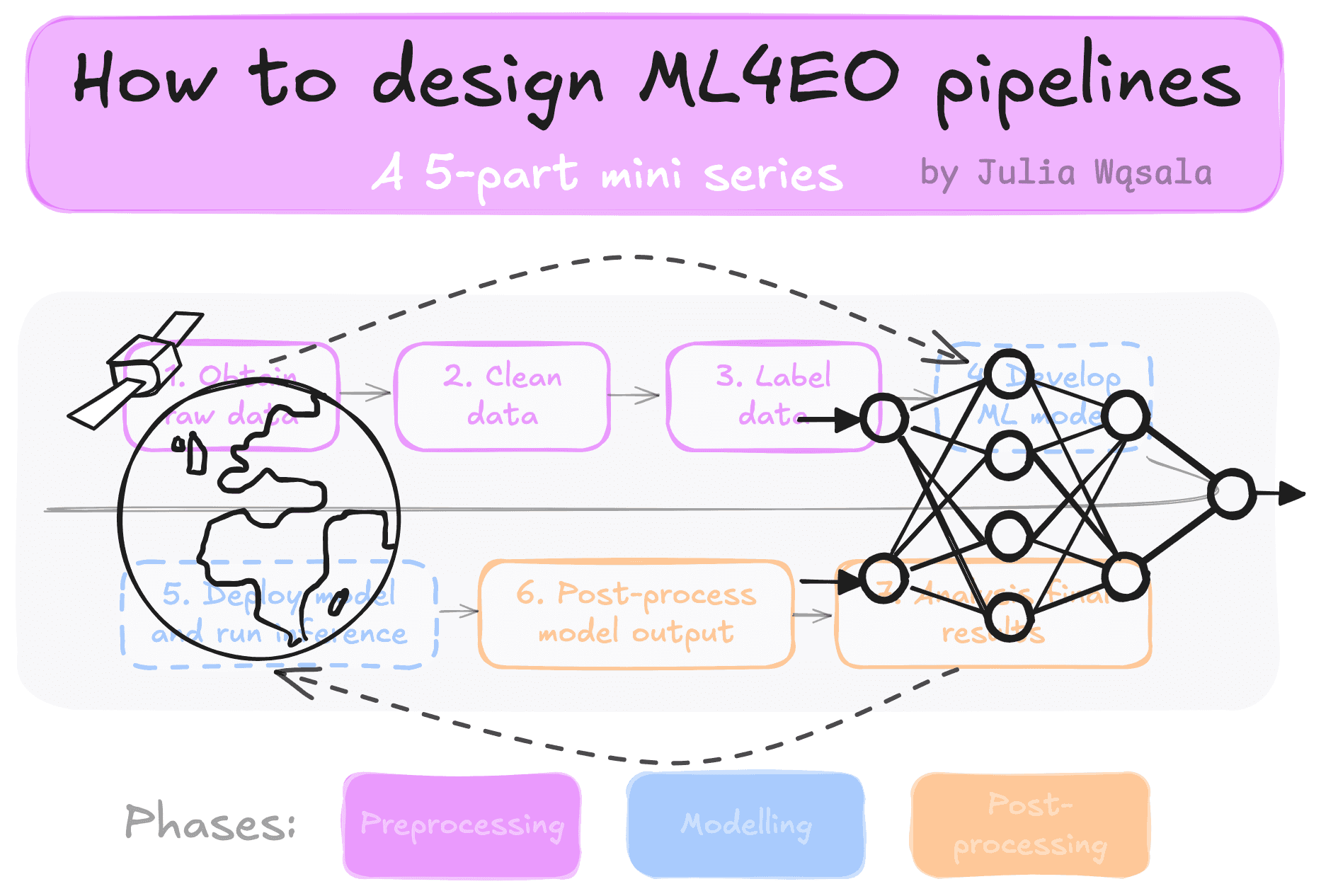

This is part 2 of the mini-series on the practical challenges of starting out in Machine Learning for Earth Observation (ML4EO). Read the introduction here. This blog shares my first experience working with ML4EO data as a master’s student. I’ll describe, step by step, how I built my first ML4EO dataset, summarise the skills I learned along the way, and match them to the high-level steps of ML4EO pipelines.

My first time working with satellite data was during my master’s thesis at Leiden University. I was supervised by:

The goal of the project was to create an Automated Machine Learning (AutoML) model for super-resolution of satellite images. Super-resolution (SR) is a pre-processing task that increases the spatial resolution of an image. For this project, I used a few existing datasets from different sensors and built a dataset called SENT-NICFI.

The project lasted almost a year, from September until July. I continued working on the paper during my PhD. The paper was published in February; you can read it here.

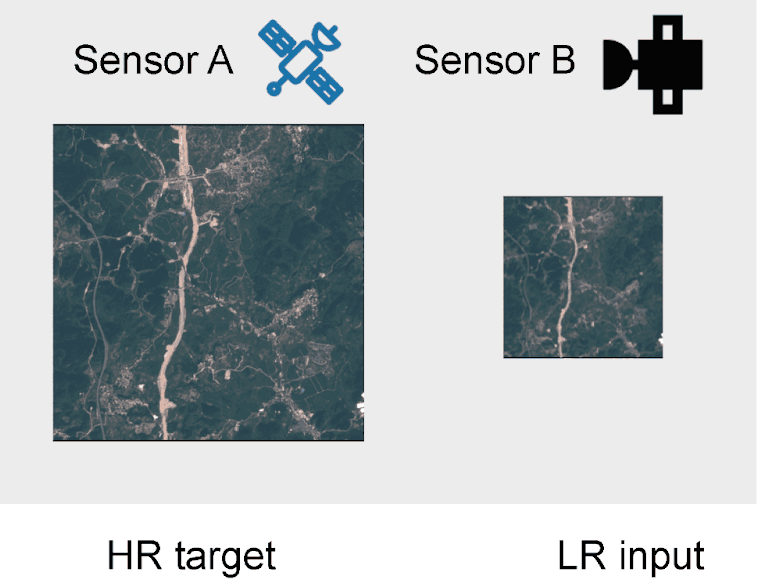

The main ML4EO challenge of this project was to create a new SR dataset. An SR dataset consists of pairs of images that show the same place at different resolutions. You feed the network the low-resolution image, and it creates a super-resolved image. Then, you evaluate the quality of that super-resolved image by comparing it with the “original” high-resolution image.
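A common way to score that comparison is the peak signal-to-noise ratio (PSNR), where higher is better. The sketch below is a minimal NumPy version. It assumes both images are arrays of the same shape with values scaled to [0, 1]. It illustrates the idea and is not necessarily the exact metric or implementation used in the paper.

```python
import numpy as np


def psnr(super_resolved: np.ndarray, reference: np.ndarray, max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio (dB) between a super-resolved image and its high-res reference."""
    if super_resolved.shape != reference.shape:
        raise ValueError("images must have the same shape")
    # Mean squared error over all pixels and bands
    diff = super_resolved.astype(np.float64) - reference.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10 * np.log10(max_value ** 2 / mse))


# Toy usage: a prediction that is off by 0.01 everywhere
reference = np.full((3, 200, 200), 0.5)
prediction = reference + 0.01
print(round(psnr(prediction, reference), 1))  # → 40.0
```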

The first step was to select two satellites: one with a lower spatial resolution for the low-resolution images and one with a higher resolution for the high-resolution reference images. SR networks upscale images by integer factors (2×, 3×, or 4× higher resolution), so I needed two satellites whose resolutions differed by a factor of 2 or 3. At this point, I barely knew any satellites. I read a lot of papers and blogs to make a list of satellites with freely accessible data until I found a pair that matched my requirements: Sentinel-2 (10 m resolution) and PlanetScope (5 m resolution). So, I was going to train my neural network with Sentinel-2 images as input and the higher-resolution PlanetScope images as targets.
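The pairing requirement boils down to a small check on pixel sizes. The helper below is hypothetical: it shows the check itself, not a tool the author used. The 4.77 m value in the usage lines is only an illustrative pixel size that fails the test.

```python
from typing import Optional


def integer_scale_factor(low_res_m: float, high_res_m: float,
                         tolerance: float = 1e-6) -> Optional[int]:
    """Return the upscaling factor between two pixel sizes if it is a whole number >= 2, else None."""
    ratio = low_res_m / high_res_m
    nearest = round(ratio)
    # SR networks typically upscale by 2x, 3x or 4x, so require a whole-number ratio of at least 2
    if nearest >= 2 and abs(ratio - nearest) <= tolerance:
        return nearest
    return None


print(integer_scale_factor(10, 5))     # → 2 (Sentinel-2 vs PlanetScope)
print(integer_scale_factor(10, 4.77))  # → None (not an integer factor)
```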

The next step was to request access to the data. You need to create an account to download satellite data, even when it is free. Sentinel data is easy to access. PlanetScope is a commercial satellite constellation whose data you usually pay for, but the NICFI programme provides free access to some of its images. I also generated API keys so I could download data using Python.

Selecting images for the dataset was simple. Suzanne helped me choose five land types in Africa and find multiple images of each land type. I noted the ID of each image from PlanetScope’s API. The plan was to first download the PlanetScope images. Then, I would use a Sentinel-2 toolbox to get matching images.

You can usually download satellite data in two ways: from the provider’s website (e.g., the Copernicus Browser, which hosts Sentinel data) or through an API, using a programming language like Python. I prefer the programming route because it’s more reproducible and makes it easier to download large amounts of data.

APIs give you different ways to filter or search the data: for example, by date, by coordinates, or by an image’s unique identifier. I wrote a simple program to download the PlanetScope images directly onto the university cluster. I just had to select the right function for the data type (quads, in my case). After I overcame my initial overwhelm with the API, downloading this data was easy peasy.
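Below is a minimal sketch of such a quad download that uses only the Python standard library. It is not the author’s script and does not use Planet’s own client. Several things are assumptions to check against Planet’s current documentation:

- the Basemaps endpoint URL;
- the response fields `items`, `_links.download` and `_links._next`;
- passing the API key as the HTTP Basic username.

The mosaic ID, bounding box and `PL_API_KEY` environment variable are hypothetical.

```python
import base64
import json
import os
import shutil
import urllib.parse
import urllib.request

# Assumption: Planet Basemaps API endpoint; verify against Planet's current docs
BASEMAPS_URL = "https://api.planet.com/basemaps/v1/mosaics"


def quads_search_url(mosaic_id: str, bbox: tuple) -> str:
    """URL listing the quads of one mosaic that intersect a lon/lat bounding box."""
    west, south, east, north = bbox
    query = urllib.parse.urlencode({"bbox": f"{west},{south},{east},{north}"})
    return f"{BASEMAPS_URL}/{mosaic_id}/quads?{query}"


def parse_quads(page: dict) -> list:
    """Extract (quad id, download link) pairs from one page of the listing (assumed layout)."""
    return [(item["id"], item["_links"]["download"])
            for item in page.get("items", [])
            if "download" in item.get("_links", {})]


def authorised_request(url: str, api_key: str) -> urllib.request.Request:
    """Request that sends the API key as the HTTP Basic username with an empty password."""
    token = base64.b64encode(f"{api_key}:".encode()).decode()
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})


def list_quads(mosaic_id: str, bbox: tuple, api_key: str) -> list:
    """Follow the paginated listing and collect every quad's download link."""
    quads, url = [], quads_search_url(mosaic_id, bbox)
    while url:
        with urllib.request.urlopen(authorised_request(url, api_key)) as response:
            page = json.load(response)
        quads.extend(parse_quads(page))
        url = page.get("_links", {}).get("_next")  # assumed to be absent on the last page
    return quads


def download_quads(mosaic_id: str, bbox: tuple, api_key: str, out_dir: str = ".") -> None:
    """Save every quad GeoTIFF in the bounding box to out_dir, skipping files already on disk."""
    os.makedirs(out_dir, exist_ok=True)
    for quad_id, link in list_quads(mosaic_id, bbox, api_key):
        path = os.path.join(out_dir, f"{quad_id}.tif")
        if os.path.exists(path):
            continue  # already downloaded, so re-running the script is cheap
        with urllib.request.urlopen(authorised_request(link, api_key)) as response, \
                open(path, "wb") as f:
            shutil.copyfileobj(response, f)
```

A call would look like `download_quads("<mosaic-id>", (30.0, -2.0, 30.5, -1.5), os.environ["PL_API_KEY"], "quads/")`. Keeping the URL building and response parsing in separate pure functions makes them easy to test without touching the network.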

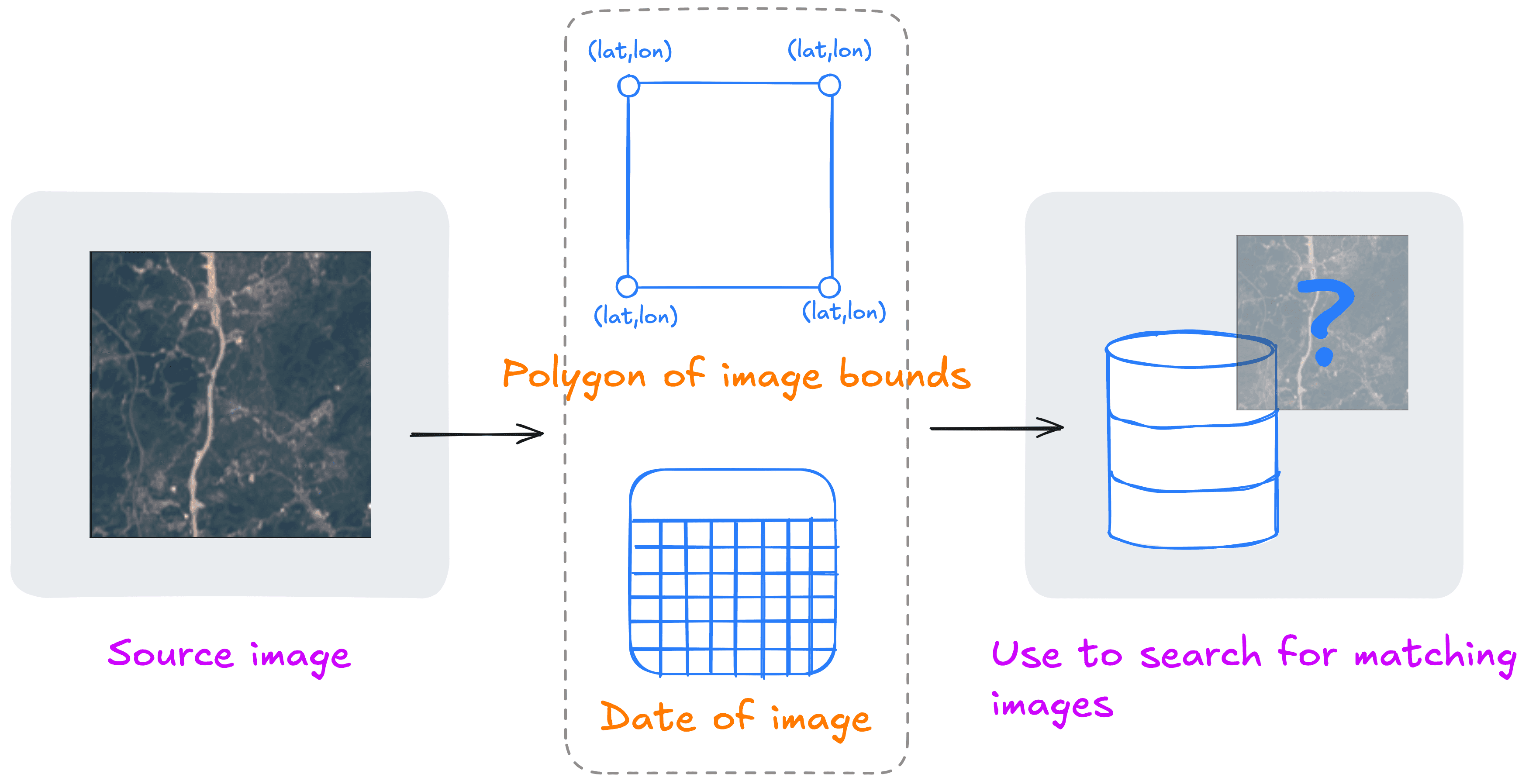

Next came the hardest part of downloading data: getting matching Sentinel-2 images. Matching means that the two images cover the same spatial region and that the time difference between the PlanetScope and Sentinel-2 images is as small as possible. To match the images spatially, I extracted the bounds of each PlanetScope image as a polygon in latitude/longitude coordinates. To match them in time, I took the dates from the PlanetScope timestamps and limited how much older or newer the Sentinel-2 image was allowed to be. I also added some filters, like a maximum cloud cover.
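The matching criteria can be expressed as three small functions, sketched below:

- a lon/lat bounding box turned into a WKT polygon for the spatial search;
- a symmetric date window around the PlanetScope acquisition date;
- a filter on candidate scenes.

These are illustrations only. The candidate dictionary keys, the 10-day window and the 10 % cloud threshold are hypothetical. In practice, the bounds of a GeoTIFF could be reprojected to latitude/longitude with something like `rasterio.warp.transform_bounds`.

```python
from datetime import date, timedelta


def bounds_to_wkt(west: float, south: float, east: float, north: float) -> str:
    """Closed WKT polygon (counter-clockwise, first point repeated) from lon/lat bounds."""
    ring = [(west, south), (east, south), (east, north), (west, north), (west, south)]
    return "POLYGON((" + ", ".join(f"{lon} {lat}" for lon, lat in ring) + "))"


def date_window(acquired: date, max_days: int) -> tuple:
    """Allowed Sentinel-2 period: max_days before to max_days after the PlanetScope date."""
    return acquired - timedelta(days=max_days), acquired + timedelta(days=max_days)


def matches(candidate: dict, window: tuple, max_cloud_pct: float) -> bool:
    """Keep a candidate scene only if it falls in the window and is cloud-free enough."""
    start, end = window
    return start <= candidate["date"] <= end and candidate["cloud_pct"] <= max_cloud_pct


window = date_window(date(2021, 6, 15), max_days=10)
print(window)  # → (datetime.date(2021, 6, 5), datetime.date(2021, 6, 25))
print(bounds_to_wkt(30.0, -2.0, 30.5, -1.5))
# → POLYGON((30.0 -2.0, 30.5 -2.0, 30.5 -1.5, 30.0 -1.5, 30.0 -2.0))
```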

I first tried to write a script to download Sentinel-2 data with these polygons and dates, but the toolbox completely confused me. Fortunately, Gurvan sent me a script that I adapted for this purpose. In hindsight, it’s funny how short this script is: I toiled so hard on it!

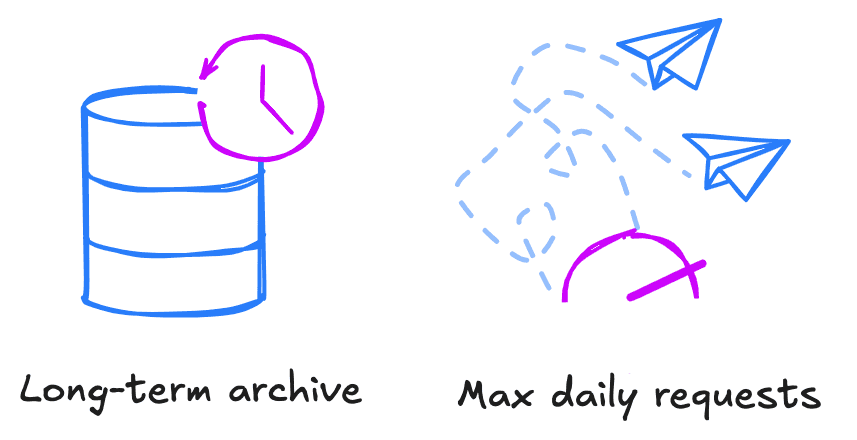

Whereas downloading the PlanetScope data was a one-and-done job, Sentinel-2 was a different story because of two download restrictions: the Long-Term Archive and the maximum number of requests.

The twin Sentinel-2 satellites produce enormous volumes of data: the equivalent of 20 ImageNets per day! Only some of the images can be downloaded right away, because images older than 6 months are stored in the Long-Term Archive (LTA). If you request access to that data, you must wait about 24 hours before you can download it.

Not only do you have to wait for some of the older data, but you can also only make 20 image requests per day. As I understand it, this is because Sentinel-2 data access is free, and providing enough bandwidth for everyone to download unlimited data would be too expensive. However, I needed 30 tiles (the Sentinel-2 equivalent of PlanetScope quads), and the requests to the LTA also counted towards the limit.

Because of these two factors, my (temporary) morning routine was to re-run my script until I had no more LTA or download limit warnings.
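A script that is re-run every morning works best when each run only spends the daily budget on what is still missing. The sketch below is a hypothetical planner, not the author’s script. It skips tiles already on disk and asks for archived (LTA) tiles first, since their retrieval takes about a day and they can then be ready for the next run. It fills the rest of the shared budget with tiles that can be downloaded immediately. Tile IDs are made up.

```python
def plan_run(tiles: list, downloaded: set, online: set, daily_limit: int = 20) -> tuple:
    """Split remaining tiles into today's downloads and LTA requests within one shared limit.

    tiles:       every tile id the dataset needs
    downloaded:  ids already on disk (skipped, so re-running is safe)
    online:      ids downloadable right away; all others are assumed to sit in the LTA
    daily_limit: shared budget for downloads and LTA requests
    """
    remaining = [t for t in tiles if t not in downloaded]
    offline = [t for t in remaining if t not in online]
    ready = [t for t in remaining if t in online]
    # Request archived tiles first: retrieval takes about a day, so they can be ready tomorrow
    to_request = offline[:daily_limit]
    # Spend whatever budget is left on tiles that can be downloaded now
    to_download = ready[:daily_limit - len(to_request)]
    return to_download, to_request


tiles = [f"T{i:02d}" for i in range(30)]
to_download, to_request = plan_run(tiles, downloaded=set(tiles[:5]), online=set(tiles[:15]))
print(len(to_download), len(to_request))  # → 5 15
```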

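When I checked the Sentinel-2 data downloaded by my script, I quickly discovered two things:

- some quads had no matching Sentinel-2 image at all;
- other quads had more than one matching image.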

So I read the log files produced by the Sentinel-2 downloading script and learned that sometimes the script couldn’t find any image that was cloud-free enough. Suzanne pointed out that all these images were from around the equator, an area almost permanently covered by clouds! This was new to me.

I still don’t understand how the PlanetScope images could be so cloud-free (I didn’t notice the cloud problem before). I think they combine images from multiple days to get clean ones. In any case, I fixed the problem by selecting new quads further away from the equator. Then, I re-ran my Sentinel-2 download script.

Then, I looked at the quads with more than one matching image. The Sentinel-2 script downloaded all the images matching my criteria (polygon, date, clouds). I manually selected images based on the amount of clouds and how well the colours matched. Now, I had complete pairs of PlanetScope-Sentinel-2 images and was ready for pre-processing.
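Both situations (quads with no match and quads with several matches) can be surfaced automatically before any manual inspection. The sketch below is a hypothetical helper, not part of the author’s pipeline. It groups candidates per quad, lists the quads without any match, and ranks the remaining candidates by cloud cover and then by time gap. It only produces a shortlist: judging how well the colours match still needs a human eye. The dictionary keys are assumptions.

```python
from collections import defaultdict


def summarise_matches(quads: list, candidates: list) -> tuple:
    """Return (quads without any candidate, quad -> candidates ranked best-first).

    candidates: dicts with hypothetical keys 'quad', 'tile', 'cloud_pct', 'days_apart'.
    """
    by_quad = defaultdict(list)
    for cand in candidates:
        by_quad[cand["quad"]].append(cand)
    missing = [q for q in quads if not by_quad[q]]
    # Rank: least cloud first, then the smallest time gap to the PlanetScope image
    ranked = {q: sorted(by_quad[q], key=lambda c: (c["cloud_pct"], c["days_apart"]))
              for q in quads if by_quad[q]}
    return missing, ranked


candidates = [
    {"quad": "A", "tile": "a1", "cloud_pct": 12.0, "days_apart": 1},
    {"quad": "A", "tile": "a2", "cloud_pct": 3.0, "days_apart": 6},
    {"quad": "B", "tile": "b1", "cloud_pct": 8.0, "days_apart": 2},
]
missing, ranked = summarise_matches(["A", "B", "C"], candidates)
print(missing)                                        # → ['C']
print([q for q, c in ranked.items() if len(c) > 1])   # → ['A']
```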

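Now, I was three steps away from having an ML-ready dataset:

- resampling the images so that their resolutions differed by an exact integer factor;
- cropping them into patches small enough to fit into GPU memory;
- colour-correcting the PlanetScope images.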

As I said earlier, SR networks upscale images with integer factors. But when I printed the metadata of my satellite images with

Writing the resampling script was just like writing the download scripts. I struggled to find the right functions, and even a library that could do the resampling. But the final script is straightforward! I think the problem was that I barely knew any terms related to geospatial data.
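Resampling comes down to choosing a new pixel size and interpolating the pixel values onto the new grid. Geospatial tools such as GDAL’s `gdalwarp -tr` or rasterio do this while also updating the georeferencing. The NumPy sketch below ignores georeferencing and only shows the interpolation step (bilinear). It assumes a source pixel size of 4.77 m, an illustrative value not taken from the post, resampled to exactly 5 m.

```python
import numpy as np


def target_shape(height: int, width: int, src_res_m: float, dst_res_m: float) -> tuple:
    """Pixel dimensions that cover (about) the same ground extent at the new pixel size."""
    return round(height * src_res_m / dst_res_m), round(width * src_res_m / dst_res_m)


def resample_bilinear(band: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Bilinearly resample a single 2-D band to out_h x out_w pixels."""
    band = np.asarray(band, dtype=np.float64)
    in_h, in_w = band.shape
    # Map output pixel centres to (fractional) input coordinates, clamped to the image
    ys = np.clip((np.arange(out_h) + 0.5) * in_h / out_h - 0.5, 0, in_h - 1)
    xs = np.clip((np.arange(out_w) + 0.5) * in_w / out_w - 0.5, 0, in_w - 1)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, in_h - 1)
    x1 = np.minimum(x0 + 1, in_w - 1)
    wy = (ys - y0)[:, None]  # vertical interpolation weights
    wx = (xs - x0)[None, :]  # horizontal interpolation weights
    top = band[y0][:, x0] * (1 - wx) + band[y0][:, x1] * wx
    bottom = band[y1][:, x0] * (1 - wx) + band[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy


band = np.random.default_rng(0).random((400, 400))  # stand-in for one PlanetScope band
out_h, out_w = target_shape(*band.shape, src_res_m=4.77, dst_res_m=5.0)
print(resample_bilinear(band, out_h, out_w).shape)  # → (382, 382)
```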

Next, the full quads and tiles were too large to fit into GPU memory. The PlanetScope quads are 4096×4096 pixels, compared to an average of 469×387 in ImageNet. So I wrote a script that cropped the images into patches of 100×100 pixels (Sentinel-2) and 200×200 pixels (PlanetScope). The sizes differ because the resolutions differ: at 10 m and 5 m per pixel, both patches cover the same 1 km × 1 km area.
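The key detail in cropping is keeping each low- and high-resolution patch aligned. A patch that starts at pixel (row, col) in Sentinel-2 starts at (2·row, 2·col) in PlanetScope. The sketch below assumes both images are already co-registered arrays shaped (bands, H, W), with the high-resolution one exactly `scale` times larger. It cuts non-overlapping patches and drops edge pixels that do not fill a whole patch. It is an illustration, not the author’s script.

```python
import numpy as np


def paired_patches(low: np.ndarray, high: np.ndarray, patch: int = 100, scale: int = 2) -> list:
    """Cut aligned (low-res, high-res) patch pairs from co-registered (bands, H, W) arrays."""
    _, h, w = low.shape
    if high.shape[1:] != (h * scale, w * scale):
        raise ValueError("high-res image must be exactly `scale` times larger than the low-res one")
    pairs = []
    # Non-overlapping grid; leftover edge pixels that do not fill a whole patch are dropped
    for row in range(0, h - patch + 1, patch):
        for col in range(0, w - patch + 1, patch):
            lr = low[:, row:row + patch, col:col + patch]
            hr = high[:, row * scale:(row + patch) * scale, col * scale:(col + patch) * scale]
            pairs.append((lr, hr))
    return pairs


low = np.zeros((4, 250, 250))   # e.g. a 4-band Sentinel-2 crop
high = np.zeros((4, 500, 500))  # the matching PlanetScope area at 2x resolution
pairs = paired_patches(low, high)
print(len(pairs), pairs[0][0].shape, pairs[0][1].shape)  # → 4 (4, 100, 100) (4, 200, 200)
```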

Finally, my supervisors mentioned that Sentinel-2 has very good calibration of its colours/bands. Because I used PlanetScope images as targets, my model would learn to undo that calibration. I addressed this by colour-correcting the PlanetScope images with histogram matching. At the very least, this step reduces the domain shift between the two sets of satellite images. But, honestly, I still don’t think I fully understand the implications of calibration!
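Histogram matching remaps each band’s pixel values so that their cumulative distribution follows that of a reference image. The NumPy sketch below shows the technique band by band. It assumes the PlanetScope image is matched to the Sentinel-2 image of the same area and that the band order of the two arrays corresponds. Because it works on normalised cumulative histograms, the two images do not need the same pixel dimensions. scikit-image offers a ready-made version in `skimage.exposure.match_histograms`. Whether the author used that function or something else is not stated in the post.

```python
import numpy as np


def match_band(source: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Remap one band so its cumulative histogram follows the reference band's."""
    _, src_index, src_counts = np.unique(source.ravel(), return_inverse=True, return_counts=True)
    ref_values, ref_counts = np.unique(reference.ravel(), return_counts=True)
    src_cdf = np.cumsum(src_counts) / source.size
    ref_cdf = np.cumsum(ref_counts) / reference.size
    # For each source quantile, look up the reference value at the same quantile
    matched_values = np.interp(src_cdf, ref_cdf, ref_values)
    return matched_values[src_index].reshape(source.shape)


def match_bands(planetscope: np.ndarray, sentinel2: np.ndarray) -> np.ndarray:
    """Histogram-match every band of a (bands, H, W) image to the corresponding reference band."""
    return np.stack([match_band(p, s) for p, s in zip(planetscope, sentinel2)])


rng = np.random.default_rng(0)
planetscope = rng.normal(0.3, 0.1, size=(3, 200, 200))  # toy stand-ins for real bands
sentinel2 = rng.normal(0.5, 0.05, size=(3, 100, 100))
corrected = match_bands(planetscope, sentinel2)
print(corrected.shape)  # → (3, 200, 200)
```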

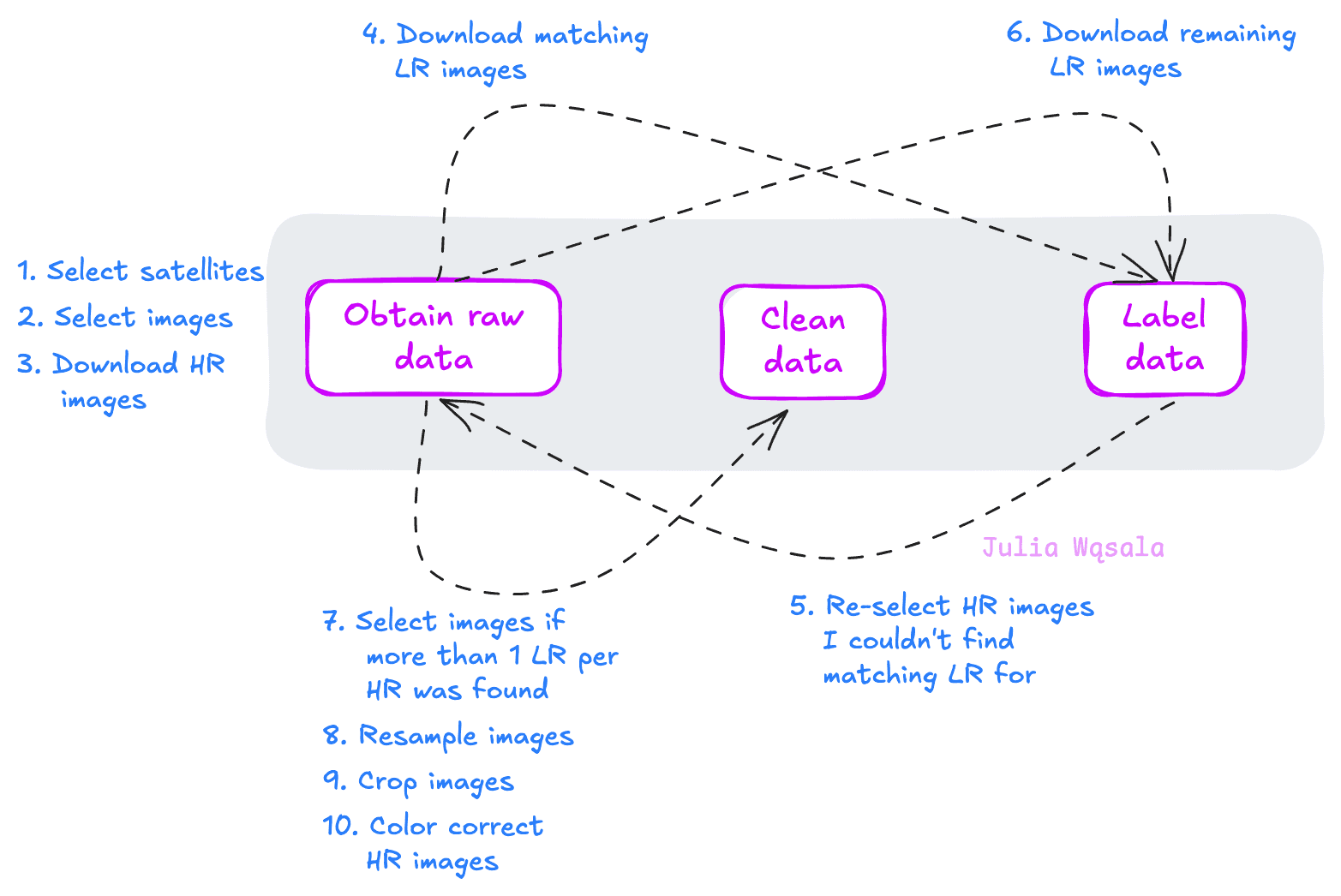

These are the steps in creating the dataset:

Written like this, you can see that the process isn’t entirely linear, but it isn’t that convoluted, either. Still, I had a hard time! Starting from zero with satellite data, I was buried in new terms, concepts, and tools. As a result, my problems were very generic: I struggled with tools such as libraries and with common data problems such as clouds. None of the steps, except maybe histogram matching, were specific to PlanetScope or Sentinel-2 data.

You need to overcome technical challenges like these before you can progress to data-specific ones. Learning GDAL and running and re-running the Sentinel-2 download script took up all my mental RAM, so I couldn’t yet fully profit from Suzanne’s, Gurvan’s, and Nicolas’s EO knowledge. But without them, I wouldn’t have:

Moreover, Gurvan and Nicolas’ SR expertise really helped me with the model, which I didn’t cover in this post.

Interdisciplinary collaboration isn’t just a nice bonus. It gives you a more rounded understanding of the problem. It’s true ML4EO. (It also just saves you time…)

The bottom line: was it too much, all at once? Probably. But would I change it? No. I learned so much precisely because the project was so much. I was developing a new dataset whilst tinkering with AutoML model source code. Balancing data and model design: it’s a theme we’ll see again and again in this series. In the next post, I’ll write about making choices in a time-constrained client project.

BibTex for my paper:

@Article{WasEtAl24, AUTHOR = "Wasala, Julia and Marselis, Suzanne and Arp, Laurens and Hoos, Holger and Longépé, Nicolas and Baratchi, Mitra", TITLE = "AutoSR4EO: An AutoML Approach to Super-Resolution for Earth Observation Images", JOURNAL = "Remote Sensing", VOLUME = "16", YEAR = "2024", NUMBER = "3", ARTICLE-NUMBER = "443", URL = "https://www.mdpi.com/2072-4292/16/3/443", ISSN = "2072-4292", DOI = "10.3390/rs16030443"}