November 10, 2024

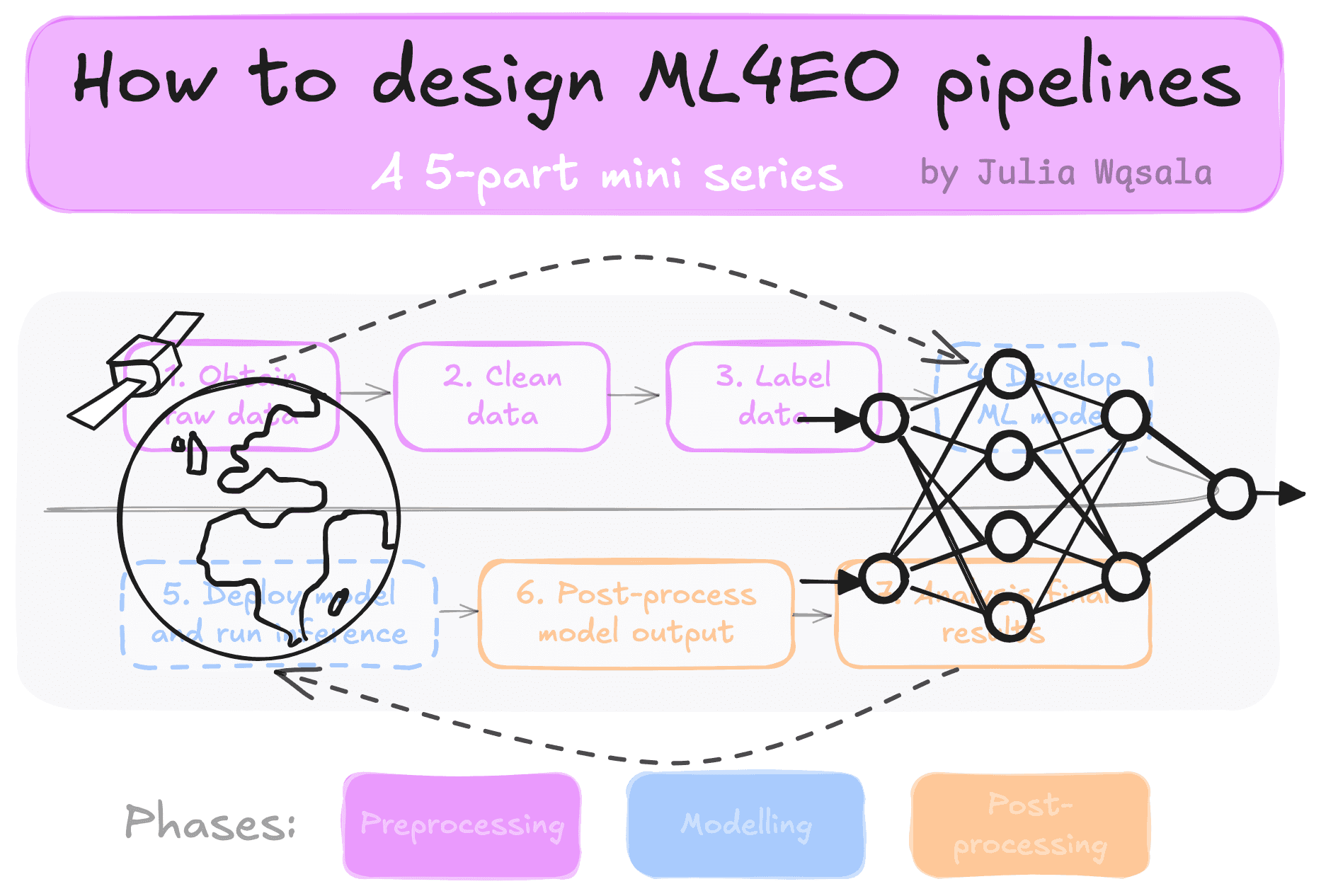

This is part 3 of the mini-series on starting in ML4EO. In the previous blog, I wrote about my first project with satellite data: my master’s thesis. In this blog, I write about my next experience: a freelance project with a data science company.

During my master’s thesis, I had to take a 6-month break from my job as IT support at the university (because of Dutch laws on temporary contracts). This was a great opportunity to look for a short job or project in ML4EO (Machine Learning for Earth Observation, which is part of GeoAI). I found a cool company doing data science projects with satellite data: Space4Good. We got in touch, and that’s how I got my first freelance project as a remote sensing data analyst and ML engineer.

The major difference from my master’s thesis is that this project was on a smaller scale: less time, less data. This impacted the ML4EO pipeline. In this blog, I’ll write about data preprocessing, choosing a model for small datasets, and labelling detections.

I joined a project on drone-based latrine detection with Amref Flying Doctors, a charity committed to improving the lives of disadvantaged communities in Africa. Latrines are separate toilet buildings that indicate improved sanitation (vs. not having a dedicated toilet).

AMREF is involved in the FINISH MONDIAL programme, which grants micro-loans to help locals start sanitation companies. We would help measure the programme’s success by counting new latrines. I was extremely excited about this opportunity because the whole reason I got into ML4EO was to solve real problems.

My role was to build a machine-learning model using drone data and a list of latrine locations collected in situ by people in Uganda. I worked with Federico Franciamore from Space4Good. Later, another freelancer, David Swinkels, joined the project to help develop the model. The project lasted from February until May 2022, and I worked on it 1-2 days a week alongside my master’s thesis.

AMREF provided drone images of confirmed latrines and unannotated test regions. We also received a list of confirmed latrines' locations. Space4Good provided access to compute resources. I processed the data and trained a machine learning model to detect latrines in the unlabelled imagery.

The first step was to transform the raw data into an ML-ready dataset. I knew how to work with GDAL and QGIS (basically the graphical version of GDAL, where you can explore images). But this data wasn’t in the standard GeoTIFF format, so we had to find and install software that could open the images.

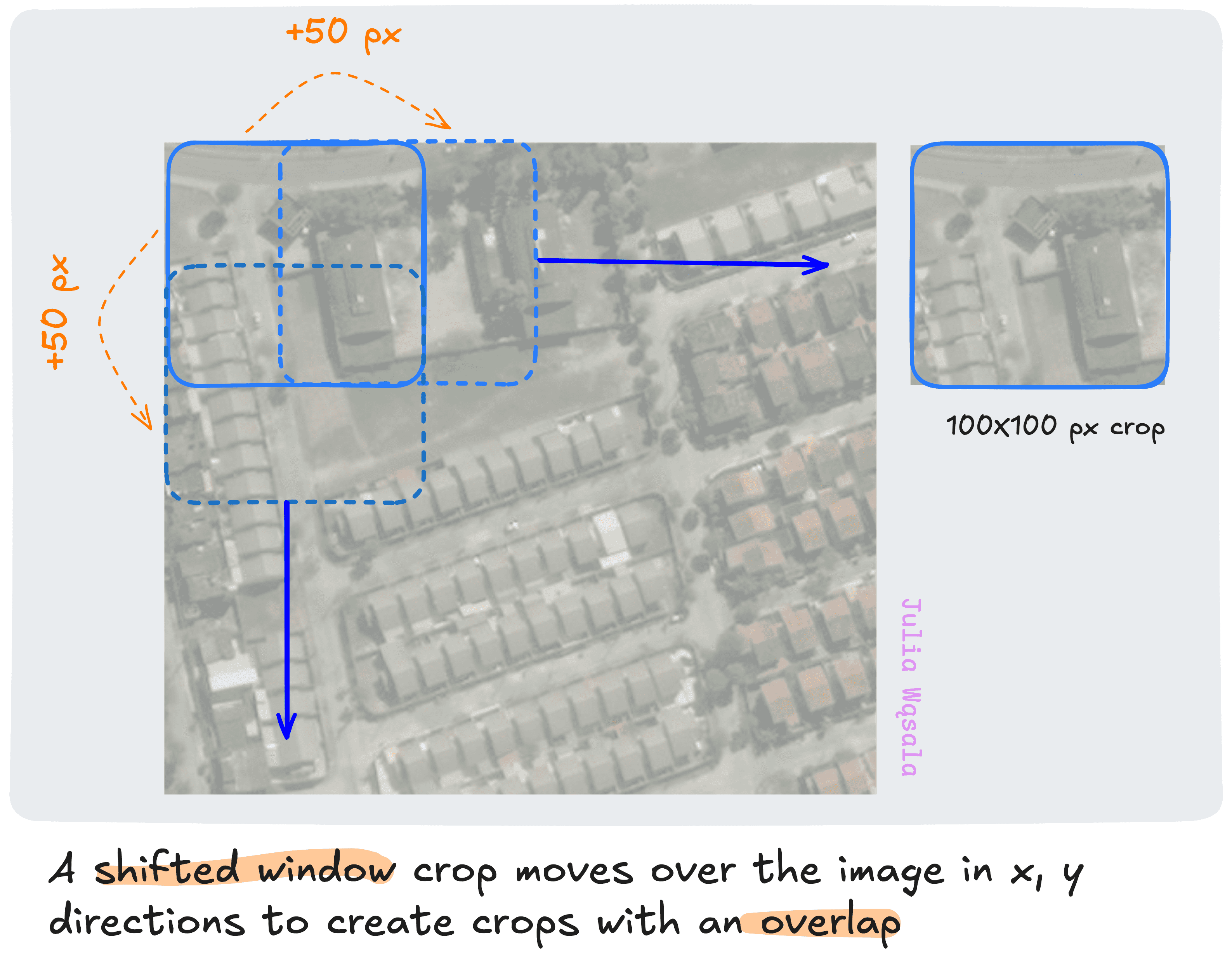

From then on, the processing was straightforward. Once the files were loaded, I mosaicked them into a single large file and cropped smaller images from it with a shifted (sliding) window.

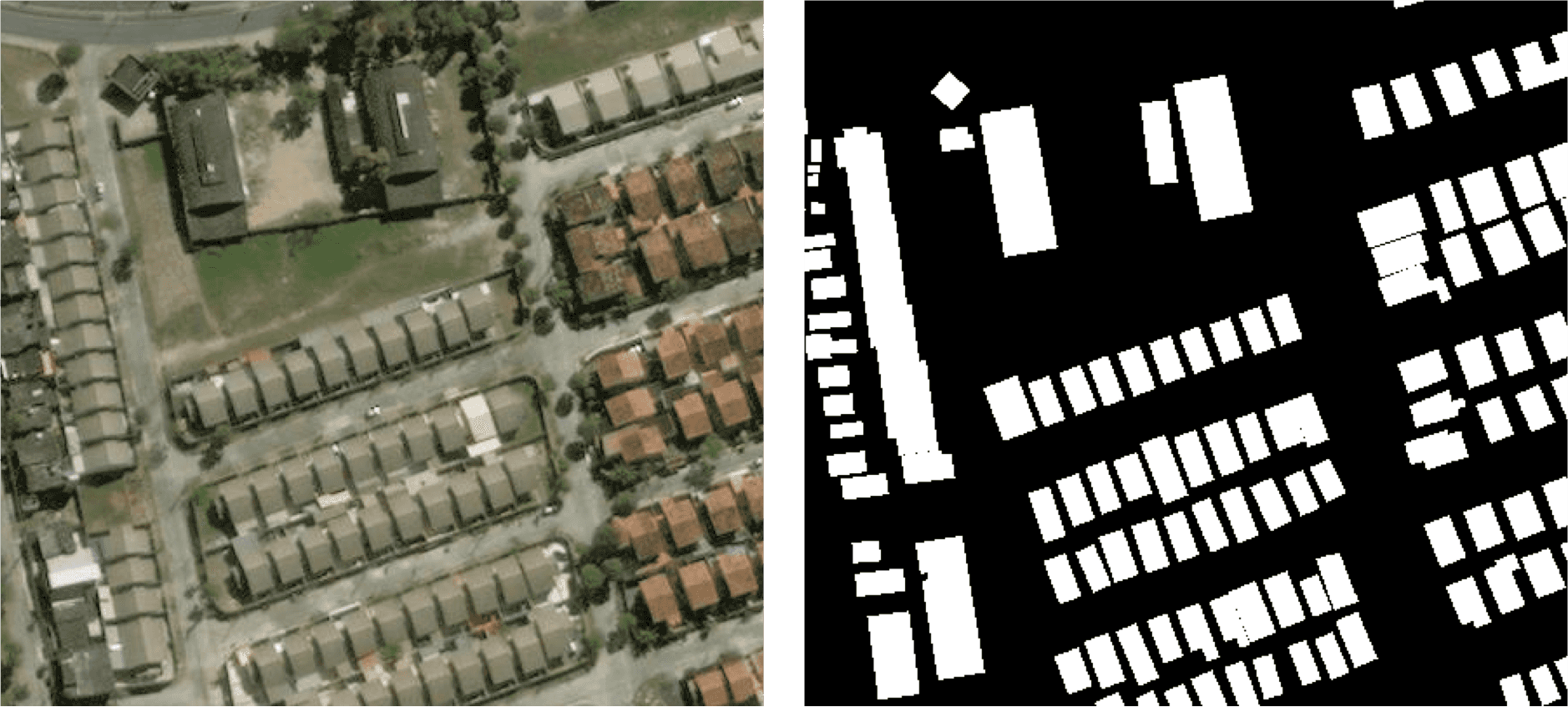
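A minimal sketch of the shifted-window cropping, assuming the mosaic has already been read into a NumPy array (e.g. with GDAL or rasterio). The 512-pixel window and 256-pixel stride are illustrative values, not the ones used in the project, and `tile_offsets`/`crop_tiles` are hypothetical helper names.

```python
import numpy as np


def tile_offsets(length: int, tile: int, stride: int) -> list[int]:
    """Start offsets along one axis so that windows of size `tile` cover [0, length)."""
    if length <= tile:
        # The single window is then smaller than `tile`; pad it if the model needs a fixed size.
        return [0]
    offsets = list(range(0, length - tile + 1, stride))
    # Add a last window flush with the edge so border pixels are not dropped.
    if offsets[-1] != length - tile:
        offsets.append(length - tile)
    return offsets


def crop_tiles(mosaic: np.ndarray, tile: int = 512, stride: int = 256):
    """Yield (row, col, crop) for every shifted-window crop of an (H, W) or (H, W, C) mosaic.

    Keeping (row, col) makes it possible to map detections back to the mosaic later.
    """
    height, width = mosaic.shape[:2]
    for row in tile_offsets(height, tile, stride):
        for col in tile_offsets(width, tile, stride):
            yield row, col, mosaic[row:row + tile, col:col + tile]


# Example on a dummy 1000 x 1200 RGB mosaic.
mosaic = np.zeros((1000, 1200, 3), dtype=np.uint8)
tiles = list(crop_tiles(mosaic, tile=512, stride=256))
print(len(tiles))  # 12
```

Overlapping windows (stride smaller than the window) mean a latrine cut by one crop's edge appears whole in a neighbouring crop, at the cost of duplicate detections later on.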

Finally, I plotted the images with the confirmed latrine locations overlaid as points, and drew polygons covering the latrine roofs. I exported these polygons as black-and-white masks, which served as labels for semantic segmentation.
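Exporting the drawn roof polygons as masks amounts to rasterizing them. In practice a GIS tool does this (GDAL's `gdal_rasterize` or `rasterio.features.rasterize`); the NumPy sketch below only makes the rule explicit. It assumes the polygon vertices are already in the crop's pixel coordinates, and `rasterize_polygons` is a hypothetical name.

```python
import numpy as np


def rasterize_polygons(polygons, height: int, width: int) -> np.ndarray:
    """Burn polygons (lists of (x, y) pixel vertices) into a 0/1 uint8 mask.

    A pixel is foreground when its centre lies inside a polygon, using the
    even-odd (ray casting) rule.
    """
    ys, xs = np.mgrid[0:height, 0:width]
    px = xs + 0.5  # pixel-centre coordinates
    py = ys + 0.5
    mask = np.zeros((height, width), dtype=bool)
    for polygon in polygons:
        inside = np.zeros((height, width), dtype=bool)
        n = len(polygon)
        for i in range(n):
            x1, y1 = polygon[i]
            x2, y2 = polygon[(i + 1) % n]
            # Does the edge straddle the horizontal line through the pixel centre?
            crosses = (y1 > py) != (y2 > py)
            # x position where the edge meets that line (inf/nan for horizontal edges,
            # which never "cross" anyway).
            with np.errstate(divide="ignore", invalid="ignore"):
                x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            # Toggle for every edge crossed by a ray going right from the centre.
            inside ^= crosses & (px < x_cross)
        mask |= inside
    return mask.astype(np.uint8)


# A square roof covering pixels 2..5 in both directions of an 8 x 8 crop.
roof_mask = rasterize_polygons([[(2, 2), (6, 2), (6, 6), (2, 6)]], 8, 8)
print(roof_mask.sum())  # 16
```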

Creating the model for this task was a trial-and-error process. We didn’t have a lot of labelled data, and I couldn’t find any other latrine detection project. On top of that, latrine detection is a genuinely difficult problem. Latrines are small rectangular buildings, often detached from the house but sometimes attached, and they can have different kinds of roofs. Because they vary so much, you would normally need a fairly large labelled dataset.

In other words, we had to get creative.

First, I decided on the deep learning library. I used Keras because it’s more end-to-end, so you don’t need to code everything from scratch. Usually, I use PyTorch because it’s the research standard and gives you more control. But I knew I wouldn't have time to tinker with the model.

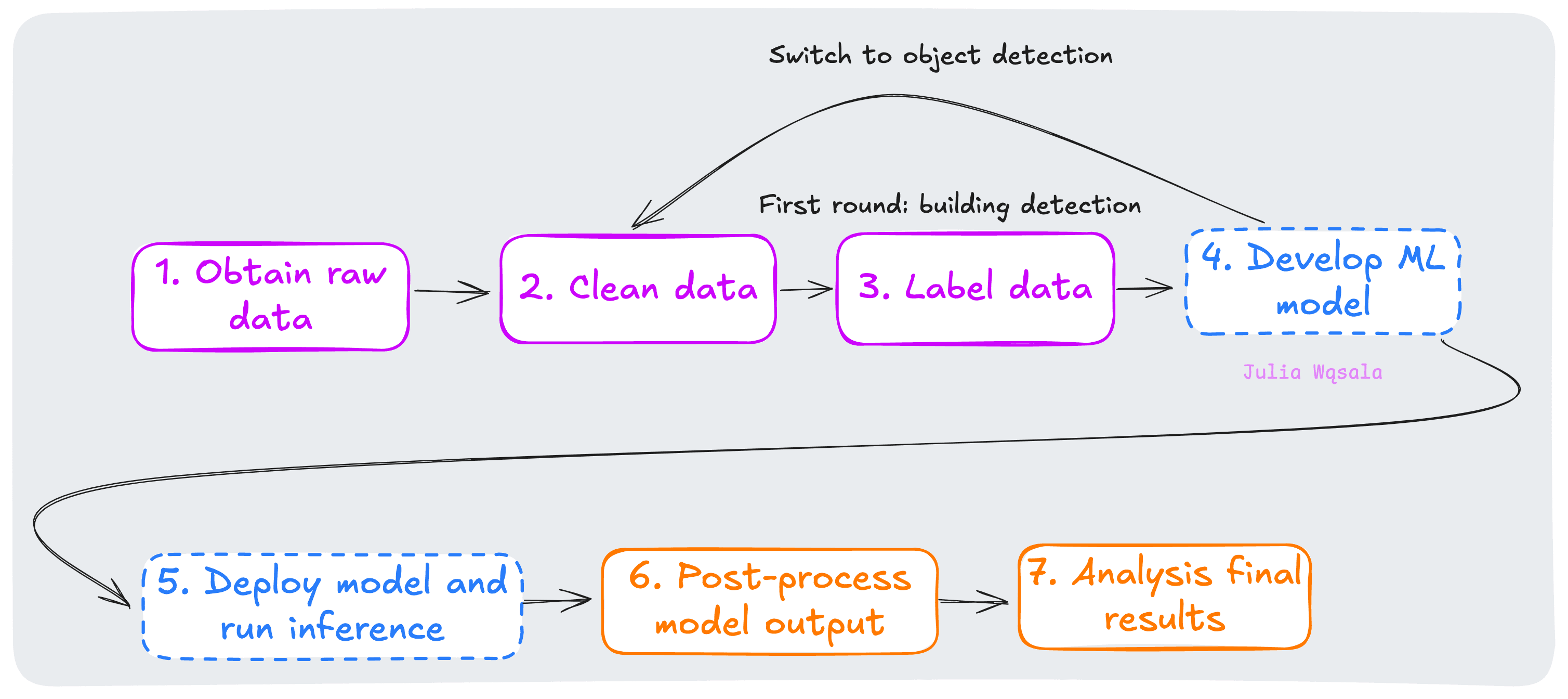

My first idea was to find a related labelled dataset, pre-train the model on it, and then fine-tune it with the labelled latrine data. I immediately thought of building detection: essentially, latrines are small buildings. It could work if we found a building detection dataset with the same resolution.

Photo Credit: GitHub user motokimura

Example image and mask from the SpaceNet7 building detection dataset

I found a few related datasets, but their resolutions differed from the drone data. The model I selected did OK in training, but it didn’t transfer well to the latrine data! I thought this was because of the difference in resolution, so I tried resampling the data to different resolutions and retraining the model. It didn’t solve the problem.
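For reference, resampling to a target resolution comes down to rescaling by the ratio of ground sampling distances (GSD, metres per pixel). This is a nearest-neighbour sketch with a hypothetical `resample_to_gsd` helper; the post doesn't say which resampling method was tried, and for downsampling imagery an averaging method (e.g. `gdalwarp -r average`) is usually the better choice. The 5 cm and 50 cm values in the example are made up.

```python
import numpy as np


def resample_to_gsd(image: np.ndarray, src_gsd: float, dst_gsd: float) -> np.ndarray:
    """Nearest-neighbour resample of an (H, W) or (H, W, C) image from src_gsd to dst_gsd."""
    scale = src_gsd / dst_gsd  # < 1 means fewer, coarser output pixels
    height, width = image.shape[:2]
    out_h = max(1, round(height * scale))
    out_w = max(1, round(width * scale))
    # Map each output pixel centre back to the input pixel that contains it.
    rows = np.minimum(((np.arange(out_h) + 0.5) / scale).astype(int), height - 1)
    cols = np.minimum(((np.arange(out_w) + 0.5) / scale).astype(int), width - 1)
    return image[rows[:, None], cols[None, :]]


# Example: bring a 5 cm drone crop down to a 50 cm "dataset" resolution.
drone_crop = np.zeros((400, 400, 3), dtype=np.uint8)
coarse = resample_to_gsd(drone_crop, src_gsd=0.05, dst_gsd=0.5)
print(coarse.shape)  # (40, 40, 3)
```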

I lost a lot of confidence because of the failures of the segmentation model. I was anxious about making the wrong decisions and struggled to create a path forward. Luckily, David joined the project around this time. Working together boosted my confidence and motivation, and we quickly got back on track. We got two key insights that led to the final solution:

Thinking ahead to the post-processing of the segmentation model, I realised that segmentation might not be the best idea. The problem was this: say we had a good model that detected latrines in the images fed to it with a shifted window. The output would be a set of segmentation masks, which are basically just pixels. That makes it really difficult to automatically count how many latrines you found, for three reasons:

I thought converting these segmentation masks to polygons would be easy, but some research showed me it wasn’t. So we changed course to object detection.
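To make the counting problem concrete: a naive way to count objects in a mask is to count connected blobs of foreground pixels. The toy masks below are invented, not project data, but they show how such a count can go wrong: a single stray pixel becomes an extra "latrine", and two adjacent roofs merge into one. `count_components` is a hypothetical helper using a 4-connected flood fill.

```python
from collections import deque

import numpy as np


def count_components(mask: np.ndarray) -> int:
    """Count 4-connected foreground blobs in a binary mask with a BFS flood fill."""
    height, width = mask.shape
    seen = np.zeros_like(mask, dtype=bool)
    count = 0
    for r in range(height):
        for c in range(width):
            if mask[r, c] and not seen[r, c]:
                count += 1  # found a new, unvisited blob
                seen[r, c] = True
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if 0 <= ny < height and 0 <= nx < width and mask[ny, nx] and not seen[ny, nx]:
                            seen[ny, nx] = True
                            queue.append((ny, nx))
    return count


clean = np.zeros((8, 12), dtype=np.uint8)
clean[2:5, 1:4] = 1  # latrine A
clean[2:5, 7:10] = 1  # latrine B

noisy = clean.copy()
noisy[7, 11] = 1  # one stray pixel

touching = np.zeros((8, 12), dtype=np.uint8)
touching[2:5, 1:4] = 1
touching[2:5, 4:7] = 1  # a second roof directly adjacent to the first

print(count_components(clean), count_components(noisy), count_components(touching))  # 2 3 1
```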

In my experience, object detection output is less noisy. The size of a bounding box may be a bit off, but you don’t get random tiny boxes the way you get random stray pixels. I hadn’t looked into satellite image object detection datasets for pre-training. David proposed something simpler: take a big model pre-trained on natural images and fine-tune it with our data. That’s one step fewer than in my plan: we don’t pre-train a model ourselves; we just use someone else’s.

We converted the masks I created into polygons and fine-tuned the model. Hooray, it worked well right away!
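Detectors are trained on bounding boxes rather than pixel masks. Assuming the roof annotations are available as pixel-coordinate vertex lists per crop, a box is just the minimum and maximum of the vertices, clipped to the crop. The helper names, the `[x_min, y_min, x_max, y_max]` layout, and the `min_size` cut-off for latrines sliced off by a crop edge are all assumptions; check what your detection library expects.

```python
def polygon_to_box(polygon, tile_width: int, tile_height: int):
    """Axis-aligned [x_min, y_min, x_max, y_max] box around a polygon, clipped to the crop."""
    xs = [x for x, _ in polygon]
    ys = [y for _, y in polygon]
    x_min = max(0.0, min(xs))
    y_min = max(0.0, min(ys))
    x_max = min(float(tile_width), max(xs))
    y_max = min(float(tile_height), max(ys))
    return [x_min, y_min, x_max, y_max]


def polygons_to_boxes(polygons, tile_width: int, tile_height: int, min_size: float = 4.0):
    """Convert every polygon to a box, dropping boxes mostly cut off by the crop edge."""
    boxes = []
    for polygon in polygons:
        x_min, y_min, x_max, y_max = polygon_to_box(polygon, tile_width, tile_height)
        # Slivers (or polygons entirely outside the crop) give tiny or negative sizes.
        if x_max - x_min >= min_size and y_max - y_min >= min_size:
            boxes.append([x_min, y_min, x_max, y_max])
    return boxes


roofs = [
    [(10, 12), (30, 12), (30, 28), (10, 28)],  # fully inside a 64 x 64 crop
    [(-20, 5), (2, 5), (2, 20), (-20, 20)],    # only a 2-pixel sliver inside
]
print(polygons_to_boxes(roofs, 64, 64))  # [[10, 12, 30, 28]]
```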

Now that we had a high-performing model, we fed the unlabelled images to it. The model outputs were pixel coordinates of the bounding boxes relative to the cropped images, which I mapped to latitude and longitude.

Photo Credit: Space4Good
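The pixel-to-coordinate mapping takes two steps: add the crop's offset in the mosaic, then apply the mosaic's affine geotransform. This sketch uses the six-number geotransform convention that GDAL's `GetGeoTransform()` returns, and assumes the mosaic is in a geographic CRS (EPSG:4326) so the result is already longitude/latitude. A projected mosaic (e.g. UTM) would need an extra reprojection step, for instance with pyproj. The helper names and example numbers are hypothetical.

```python
def pixel_to_lonlat(col: float, row: float, geotransform):
    """Apply a GDAL-style geotransform to a (col, row) position in the mosaic."""
    x0, px_w, rot_row, y0, rot_col, px_h = geotransform
    lon = x0 + col * px_w + row * rot_row
    lat = y0 + col * rot_col + row * px_h  # px_h is negative for north-up images
    return lon, lat


def box_to_lonlat(box, tile_col: int, tile_row: int, geotransform):
    """Map a crop-relative [x_min, y_min, x_max, y_max] box to (lon, lat) corners and centre."""
    x_min, y_min, x_max, y_max = box
    # Shift from crop coordinates to mosaic coordinates before georeferencing.
    top_left = pixel_to_lonlat(tile_col + x_min, tile_row + y_min, geotransform)
    bottom_right = pixel_to_lonlat(tile_col + x_max, tile_row + y_max, geotransform)
    centre = pixel_to_lonlat(
        tile_col + (x_min + x_max) / 2, tile_row + (y_min + y_max) / 2, geotransform
    )
    return top_left, bottom_right, centre


# Hypothetical north-up mosaic: origin (32.5 E, 0.75 N), 1e-6 degrees per pixel.
gt = (32.5, 1e-6, 0.0, 0.75, 0.0, -1e-6)
print(box_to_lonlat([10, 20, 30, 40], tile_col=256, tile_row=512, geotransform=gt)[2])
```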

Then, I wrote a deduplication algorithm to remove duplicate detections, since with a shifted window the same latrine can show up in more than one crop. I used a simple heuristic to decide when two polygons should be merged, and manually tweaked the minimum separation until the results looked right.
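The post doesn't spell out the heuristic, so this is only a minimal sketch of one possibility: reduce each detection to a centre (lon, lat) plus a confidence score, then keep detections greedily in score order and drop any that fall within a minimum separation of one already kept. This is similar in spirit to non-maximum suppression, with "merging" simplified to keeping the best-scoring detection. The score field, `deduplicate`, and the 2 m default are assumptions standing in for the hand-tuned value.

```python
import math

EARTH_RADIUS_M = 6_371_000.0


def haversine_m(lon1: float, lat1: float, lon2: float, lat2: float) -> float:
    """Great-circle distance in metres between two (lon, lat) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def deduplicate(detections, min_separation_m: float = 2.0):
    """Keep detections in descending score order, skipping any closer than
    `min_separation_m` to a detection that was already kept.

    `detections` is a list of (lon, lat, score) tuples.
    """
    kept = []
    for lon, lat, score in sorted(detections, key=lambda d: d[2], reverse=True):
        if all(haversine_m(lon, lat, k_lon, k_lat) >= min_separation_m for k_lon, k_lat, _ in kept):
            kept.append((lon, lat, score))
    return kept


dets = [
    (32.5, 0.75, 0.9),
    (32.5, 0.75001, 0.8),  # about 1.1 m from the first: a duplicate
    (32.5, 0.7501, 0.7),   # about 11 m away: a different latrine
]
print([score for _, _, score in deduplicate(dets)])  # [0.9, 0.7]
```

Comparing every detection against every kept one is quadratic; for many thousands of detections a spatial index would help, but for a single survey area the simple loop is easier to reason about while tuning the threshold.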

Finally, we manually checked the model’s deduplicated detections to get our final results!

After finishing the project, I visited Space4Good in person for the first time and met Federico and a few AMREF representatives. Contributing to a project for a humanitarian organisation like that was really special, and getting to meet some of the people involved made it even more so.

To summarise, these were the steps I took:

As you can see, there was quite a bit of looping back, but nothing crazy complicated! From this project, I learned two lessons:

First, my research instinct got the best of me. I thought we needed to pre-train on closely related data because EO images differ from natural images. And I thought we would need extra tricks because latrines are harder to detect than buildings. But we weren’t creating the next ResNet here; we were building a solution for a very specific problem.

Second, I doubted myself because my initial plan didn’t work out. I thought I didn’t know enough about EO data to train a model in such a short time. But the challenges I ran into weren’t caused by a lack of knowledge about EO data: the data pre- and post-processing went fine! The answer was choosing a different model, which had nothing to do with satellite images at all.

Looking back, this was a pretty unique experience. Standard techniques often don't transfer well to satellite images, and I’ve never encountered another case where fine-tuning a pre-trained model was the best solution. In every other project, I implemented some data-specific solution. And, boy, did I try a lot of things in my PhD. Look out for the next blog to read about it!