November 10, 2024

Data version control (DVC) is a powerful Python library for managing data processing steps in ML pipelines. It is actively maintained and has extensive documentation. Still, it took me over a year to finally get it working, because realistic pipelines are often more complicated than tutorial examples. In this blog post, I share nine tips for setting up DVC in projects with lots of data.

Outline:

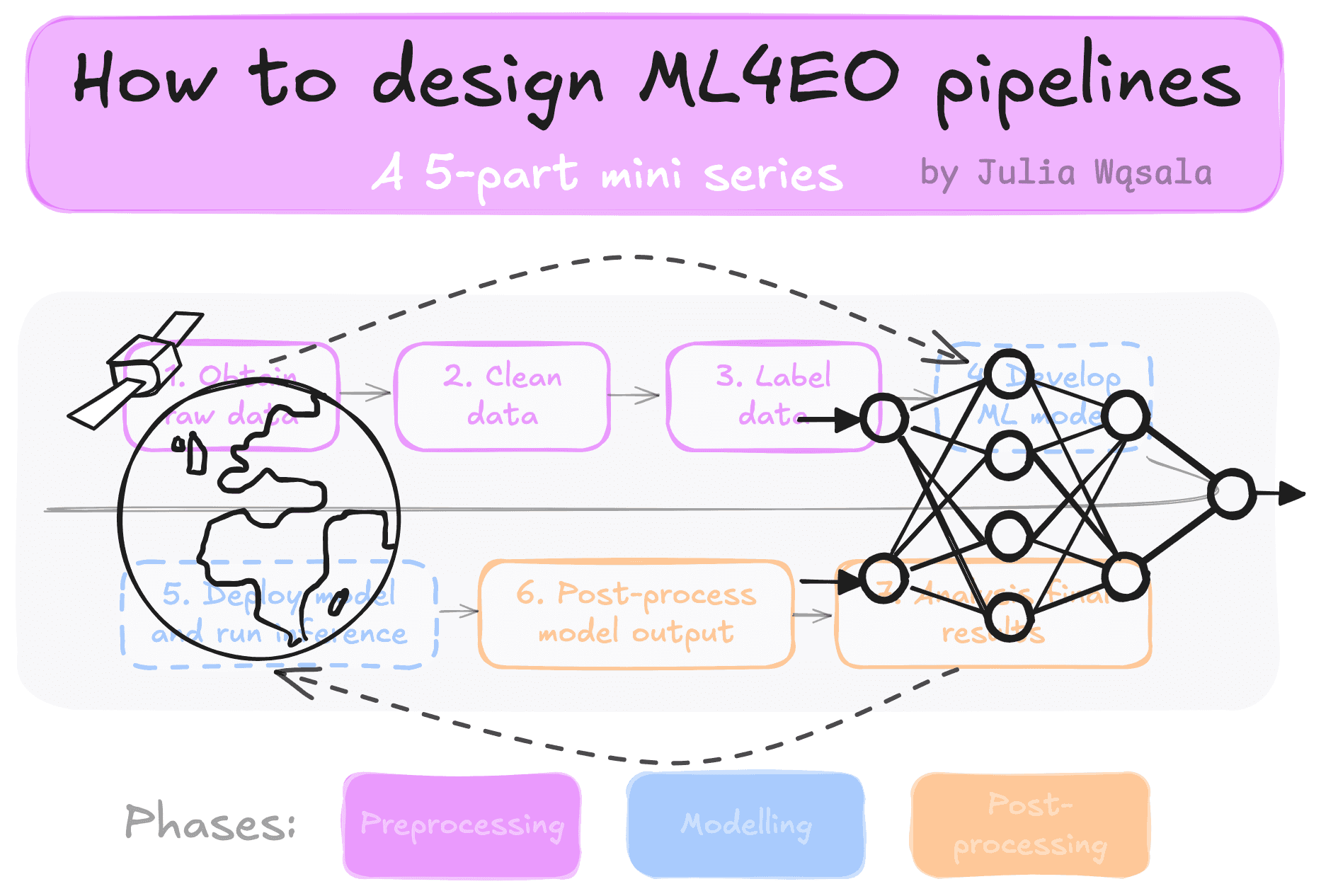

Have you ever had to rerun experiments because you forgot to rerun a data pre-processing step? I need multiple pre-processing steps: filtering, cropping, normalising, splitting, etc. Sometimes, creating ML datasets is just as complex as designing the model. Most of us already use tools like TensorBoard and Weights & Biases to keep track of model design. So, what about our data?

The problem with versioning data is that we cannot push large files or thousands of images to our GitHub repositories. But nothing is as frustrating as discovering that you ran your experiments with an outdated or incorrect data version. You can sort of version data by updating the filename: appending “normalised” or “cropped.” This mechanism has failed me many times, and I, for one, am tired of discarding results because of data problems!

Versioning your data can solve many problems, especially if you link to code versions. Imagine an automated way to say: this data was made with this normalisation procedure and this filtering step. It removes reliance on hardcoded and, let’s face it, arbitrary filenames. As a result, your code will be less bug-sensitive and more reproducible.

As I mentioned, versioning data is harder than versioning code because data files are much larger. Moreover, most of the time, we don’t just want to know the version of input, intermediate and processed data but also how they are linked. Many data processing bugs stem from forgetting to re-run some pipeline parts after changing them. DVC uses a simple trick to assign versions and links to large files. When you track a piece of data, DVC creates a unique string based on the file's contents. Just a single change to the file can change the string completely. This process is also called hashing. The links between data and scripts are defined in YAML files that live in your repository and are tracked by git.
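To make the hashing idea concrete, here is a minimal Python sketch. It is not DVC's actual implementation: I use MD5 from the standard library because .dvc files store an md5 field, and the file name `labels.csv` and the helper `file_md5` are made up for illustration.

```python
import hashlib
import tempfile
from pathlib import Path


def file_md5(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex MD5 digest of a file, read in chunks to keep memory use low."""
    digest = hashlib.md5()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def demo() -> tuple[str, str]:
    """Hash a small file, change one character, and hash it again."""
    with tempfile.TemporaryDirectory() as tmp:
        data = Path(tmp) / "labels.csv"  # hypothetical file name
        data.write_text("id,label\n1,cat\n2,dog\n")
        before = file_md5(data)
        data.write_text("id,label\n1,cat\n2,dot\n")  # a single character differs
        after = file_md5(data)
    return before, after


if __name__ == "__main__":
    before, after = demo()
    print(before)
    print(after)
    print("changed:", before != after)
```

The two printed strings share no obvious resemblance even though the files differ by one character, which is why a hash works as a version label.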

With these two types of files, a single status check can show you if you:

Versioning data in DVC goes in three steps:

1. dvc add: compute hashes for the data and save them in files tracked by git. You can add data to the project a few different ways (see Adding data).
2. dvc commit: move or copy the data to the cache and link the data to the working directory.
3. dvc push (optional): upload data to remote storage for backup or collaboration.

The idea is simple. But if you have a lot of files, you may not be able to follow the DVC tutorial step by step. If you don’t do it right, it can be very slow or waste space. DVC has all the functionality you need to prevent this, but it can be tricky to find in the documentation. (It took me a lot of trial and error.) I give you nine tips you should read before adding DVC to your own project.

My tips supplement the official DVC tutorial: “Get Started: Data Pipelines.” The tips are in chronological order and help you:

Before you add any data, consider how to set up your cache. When you commit data, DVC moves it to the cache, which can grow large and hold all the committed versions of your datasets.

By default, DVC saves the cache inside your project, in the .dvc/cache folder. If your repository lives in your home directory, so does the cache. You can move it to a different path if that location does not have enough space. You have two options to configure the cache location:

You can update the configuration using the DVC CLI:

```
# global cache: saved in .dvc/config, which is tracked by git
dvc config cache.dir <filepath>
# local cache: saved in .dvc/config.local, which is not tracked by git
dvc config --local cache.dir <filepath>
```

DVC doesn’t keep duplicates of your data. It uses the unique hash to check if the data is already in the cache. However, if you make just one small change in a large file, the hash will change. As a result, DVC will store a second full copy of that file. So, saving your data in multiple small files is better. DVC will then only store new copies of the individual files that changed, not of the entire dataset.
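The difference between one large file and many small ones can be sketched with a toy content-addressed cache. Everything here is hypothetical (the `ToyCache` class, the made-up sample data, MD5 as the hash); DVC's real cache lives on disk and is more sophisticated, but the bookkeeping idea is the same.

```python
import hashlib


class ToyCache:
    """A toy content-addressed store: one entry per unique hash."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}

    def commit(self, files: dict[str, bytes]) -> int:
        """Store every file under its hash; return the number of newly stored bytes."""
        new_bytes = 0
        for content in files.values():
            key = hashlib.md5(content).hexdigest()
            if key not in self.blobs:  # unchanged content is already cached
                self.blobs[key] = content
                new_bytes += len(content)
        return new_bytes


def layouts(rows: list[bytes]) -> tuple[dict[str, bytes], dict[str, bytes]]:
    """Return the same data as many small files and as one large file."""
    many = {f"sample_{i}.txt": row for i, row in enumerate(rows)}
    one = {"all.txt": b"".join(rows)}
    return many, one


def compare() -> tuple[int, int]:
    """Commit two versions of a dataset and report the extra bytes per layout."""
    rows = [f"sample {i}\n".encode() * 100 for i in range(50)]  # made-up data
    fixed = list(rows)
    fixed[7] = fixed[7] + b"fixed\n"  # a small fix to a single sample

    many_v1, one_v1 = layouts(rows)
    many_v2, one_v2 = layouts(fixed)

    cache_many, cache_one = ToyCache(), ToyCache()
    cache_many.commit(many_v1)
    cache_one.commit(one_v1)
    return cache_many.commit(many_v2), cache_one.commit(one_v2)


if __name__ == "__main__":
    extra_many, extra_one = compare()
    print("extra bytes, many small files:", extra_many)
    print("extra bytes, one large file:  ", extra_one)
```

With many small files, the second commit only stores the one sample that changed. With a single large file, the whole dataset is stored again.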

Having many small files does create some problems with using remote storage, so you’ll need to weigh the pros and cons.

DVC can download and upload cached data to remote storage. It’s a useful single source of truth when collaborating and a backup, too. The

DVC has many options for adding remotes. However, I don’t think using a remote is always necessary or the right choice. The problem is how the data is pushed to the remote. DVC reviews each file one by one and then communicates with the remote storage to check if it needs to be uploaded. I used WebDAV to synchronise a few thousand images with SURFDrive (part of the Dutch national compute services for universities). It was so slow that the operation timed out!
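A back-of-the-envelope estimate shows why per-file checks add up. This is only a sketch: the function name and both numbers are my assumptions, not measurements, and it ignores any parallelism or batching the tool may do.

```python
def estimated_minutes(n_files: int, seconds_per_check: float) -> float:
    """Rough wall-clock estimate when every file costs one sequential round trip."""
    return n_files * seconds_per_check / 60


if __name__ == "__main__":
    # Assumed values for illustration: 5,000 images, half a second per round trip.
    print(f"{estimated_minutes(5_000, 0.5):.1f} minutes")
```

Even before a single byte of data is transferred, the round trips alone can take long enough for a connection to time out.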

You can speed up pushing and pulling by reducing the number of files, for example, by creating tarballs. However, then you run into the cache problem with large files. I don’t collaborate on my code, so remote storage wasn’t worth the hassle for me.
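If you do want to try the tarball route, packing a folder takes a few lines of Python with the standard library. This is a minimal sketch: the helper `pack_directory` and the folder and file names are hypothetical, and I leave the archive uncompressed on the assumption that image files are usually compressed already.

```python
import tarfile
import tempfile
from pathlib import Path


def pack_directory(source_dir: Path, archive_path: Path) -> int:
    """Pack every file under source_dir into one uncompressed tar archive.

    Returns the number of files packed.
    """
    files = sorted(p for p in source_dir.rglob("*") if p.is_file())
    with tarfile.open(archive_path, "w") as archive:
        for path in files:
            # Store paths relative to source_dir so the archive unpacks cleanly.
            archive.add(path, arcname=path.relative_to(source_dir).as_posix())
    return len(files)


def demo() -> tuple[int, int]:
    """Create 20 tiny files, pack them, and count the members of the archive."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        images = root / "images"  # hypothetical folder of many small files
        images.mkdir()
        for i in range(20):
            (images / f"img_{i:03d}.bin").write_bytes(bytes([i]) * 64)
        archive = root / "images.tar"
        packed = pack_directory(images, archive)
        with tarfile.open(archive) as tar:
            members = len(tar.getnames())
    return packed, members


if __name__ == "__main__":
    print(demo())
```

You would then track the archive instead of the folder, keeping in mind that any change to one image means the whole archive gets a new hash.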

The DVC tutorial shows how to track a single .csv dataset. We need some tricks to add data in realistic projects with many more inputs, intermediate data and outputs.

There are three ways you can track data:

- dvc add: add a “unit” of data to the versioning system. This can be an individual file or a whole folder. DVC assumes this is just one brick of data that is always created at the same time.
- dvc stage add: add a stage to the versioning system, linking inputs and outputs to a specific script. This approach gives DVC more context about how you created data. DVC saves the dependencies in a dvc.yaml file containing all the stages.
- dvc.yaml: this is the most flexible option. While dvc stage add is already pretty powerful, you can also manually add parametrised stages to the dvc.yaml file. For example, run the same script with different arguments, or plot results.

The best option is to manually edit the

It’s fine if you still want to use

If you run

I store code in the home directory and data in a larger partition (like the

Another thing to remember when adding data: you can only add already existing data. So first run your data processing script, then add the outputs to DVC. There used to be a command called

In any standard ML setting, you update data as you test, debug, and fine-tune your processing. I recommend only tracking data once you’re reasonably sure it’s in a good state, to avoid storing incomplete data in the cache. Your data will still change, though: either you find a bug or you find something to improve.

DVC protects tracked data so you can’t just overwrite it. I explain how to make changes to tracked data in two scenarios:

To update an existing file, run:

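```
dvc unprotect <filepath>
python <yourscript.py>
# if adding data with dvc add. You don't need add when using dvc.yaml
dvc add <filepath>
dvc commit
# dvc commit has no -m option; the message goes on the git commit
# that records the updated .dvc or dvc.lock files
git commit -am "<your commit message>"
```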

To replace a file or folder, run:

```
# you can remove individual files, or whole stages
dvc remove <filepath or stage name>
# if replacing a directory, I recommend removing it first so you don't accidentally keep old files
rm -rf <filepath>
python <yourscript.py>
# if adding data with dvc add. Else, you need to manually add the stage to the dvc.yaml file again
dvc add <filepath>
dvc commit
# dvc commit has no -m option; the message goes on the git commit
# that records the updated .dvc or dvc.lock files
git commit -am "<your commit message>"
```

Use

aws s3 using a simple dataset as example.

DVC can do more than data pipeline versioning:

Another big question is how to make it work with Slurm, the only way for me to access serious compute. Some libraries connect to commercial services like AWS, but what if your group has its own cluster? And is the library able to deal with the parallelism that comes from launching many Slurm jobs at the same time? For example, I haven’t yet figured out if starting parallel

Some people propose custom solutions, like this wrapper around DVC to submit jobs to Slurm. However, these have their own challenges. This particular package assumes you launch Slurm jobs over SSH. None of the academic clusters I know work like this: you always submit jobs from the head node. Furthermore, installing extra packages means more potential Python dependency conflicts and more time invested in learning to use them.

I’m hopeful that the experiment tools (=MLOps) landscape will improve for researchers. Forums and GitHub issue pages are full of researchers asking “ok but how does it work with Slurm?” More and more researchers create their own toolboxes, like NASLib and OpenML. At some point, commercial software will pick this up and start designing for us, too.