September 7, 2024

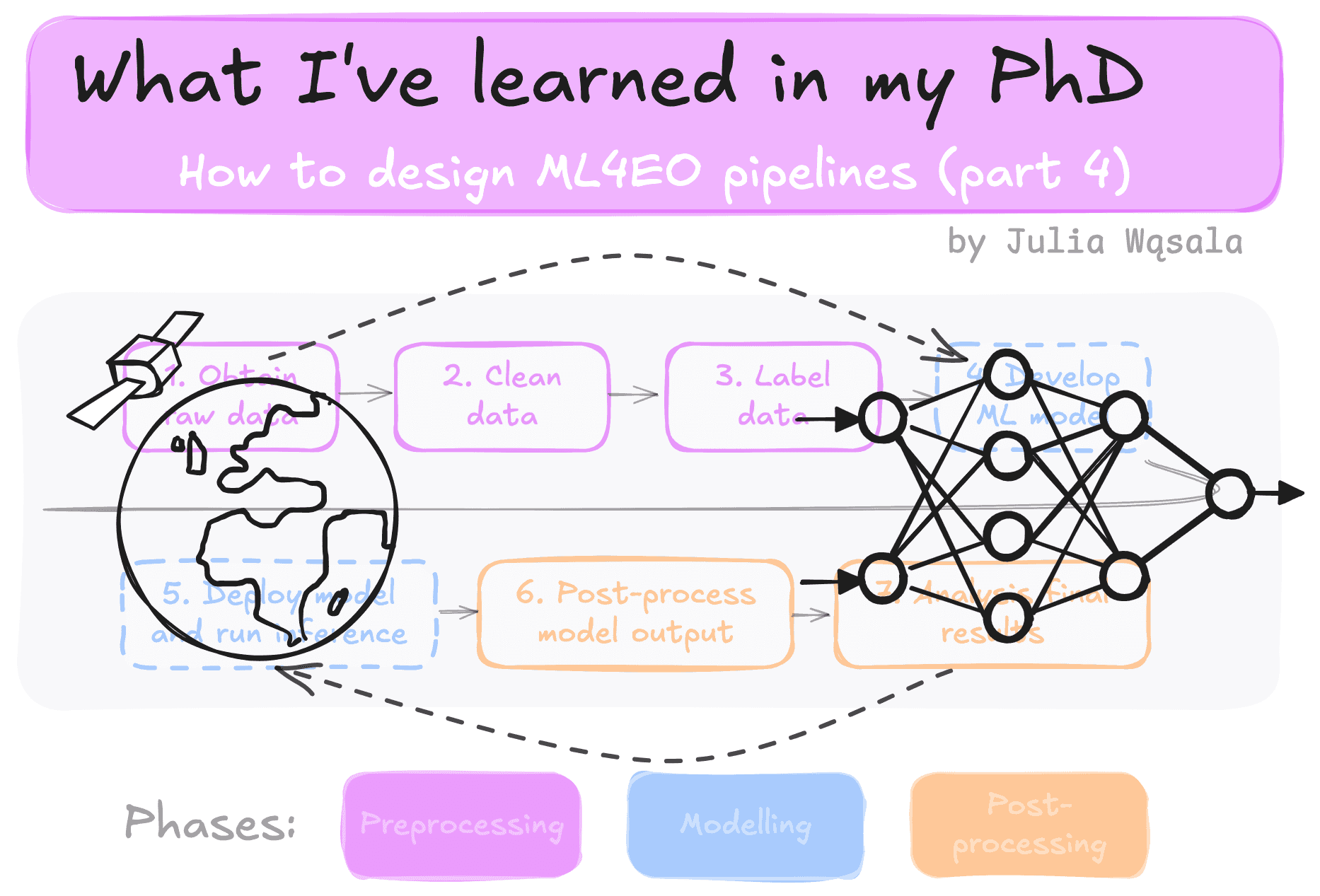

This blog is part 4 of the miniseries on starting Machine Learning for Earth Observation (ML4EO). In the previous post, I talked about my first freelance project. Here, I share what I’ve learned about ML4EO in the first two years of my PhD. It’s our last stop in the stories about my hands-on experiences.

In these two years, I finished up the paper for my master’s thesis, and I worked on my first PhD research project. It took me a little over two years, from start to end, to submit the paper.

Because this project took so much longer than the others, I tried many, many more things. So, unlike in the previous two blogs, I’ll give a more high-level overview of the steps I went through to design this ML4EO pipeline.

My PhD is shared between the Leiden Institute for Applied Computer Science (LIACS) at Leiden University and the SRON Netherlands Institute for Space Research. I also collaborate with the ESA Φ-lab. In practice, I have supervisors from LIACS and SRON, and my contact at Φ-lab is like an advisor.

My first PhD project basically started with another PhD student’s research. SRON was just publishing a paper on detecting methane plumes in TROPOMI data with ML. Read the paper here and the interview with the first author, Berend, here.

Plume detection in atmospheric data is hard because the data sometimes shows patterns that look like plumes but are something else. You need to add extra data (like cloud masks or surface pressure) to avoid these patterns showing up as false positives.

In the existing pipeline, this problem is solved by engineering features using these extra data layers. Because these features are specific to methane, you can’t use these methods directly for other gases like nitrogen dioxide. My idea was to use Automated Machine Learning (AutoML) to automatically create models for other gases.

Before tweaking anything, I had to get a good grip on the problem. I read some book chapters and papers about atmospheric science recommended by my supervisor. I read Berend’s paper and asked him a lot of questions. I also went through his code line by line, trying to understand every single step. I plotted the data (there was already a labelled dataset) and calculated summary statistics like minimum, maximum and mean pixel values of different data layers.
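If you want to do the same kind of first look at your own data, a few lines of NumPy are enough to get per-layer summary statistics. This is a minimal sketch, not the code I used. It assumes the scenes are stacked into one array of shape `(n_scenes, n_layers, height, width)` and that missing pixels are stored as NaN. The layer names in the example (`methane`, `cloud_mask`, `surface_pressure`) and the random toy data are hypothetical placeholders.

```python
import numpy as np


def layer_summary(scenes: np.ndarray, layer_names: list[str]) -> dict[str, dict[str, float]]:
    """Compute min, max and mean pixel values per data layer.

    Assumes `scenes` has shape (n_scenes, n_layers, height, width).
    NaN pixels (e.g. missing retrievals) are ignored.
    """
    stats = {}
    for i, name in enumerate(layer_names):
        values = scenes[:, i]  # all pixels of layer i across every scene
        stats[name] = {
            "min": float(np.nanmin(values)),
            "max": float(np.nanmax(values)),
            "mean": float(np.nanmean(values)),
        }
    return stats


# Toy usage: random numbers stand in for real scenes (hypothetical layer names)
rng = np.random.default_rng(0)
toy_scenes = rng.normal(size=(8, 3, 32, 32))
for layer, s in layer_summary(toy_scenes, ["methane", "cloud_mask", "surface_pressure"]).items():
    print(layer, s)
```

Comparing these numbers across layers quickly shows which layers live on very different scales and may need normalising before they go into a network.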

Atmospheric plume detection was a whole new field for me, and I was trying to understand the main concepts and problems. My goal was to identify some challenges that could be linked to ML problems like data fusion. Then, once I got an idea of related ML problems, I read a bunch of papers on those topics to develop some methodology ideas.

I designed experiments to explore different architectures and identified a few baseline networks. But I hadn’t trained or evaluated any networks yet. So, I first had to run some exploratory experiments to get an intuition of whether my methods would work.

To test my hypothesis, I started tweaking parts of the pipeline. This part wasn’t terribly exciting, but it was important. I couldn’t jump to the fanciest pipeline right away. I had a pretty elaborate Neural Architecture Search (NAS) method in mind, but I first had to test simpler solutions. I built my experiments up like this:

These results built confidence in my methods, so I implemented the NAS methods I had designed at the start. Then, I ran a lot of ablations to test different parts of my methods.

Early on, it became pretty clear that I wanted to test my model in a realistic setting, also called a use-case application. There were some important differences between the labelled data and “real-world” data:

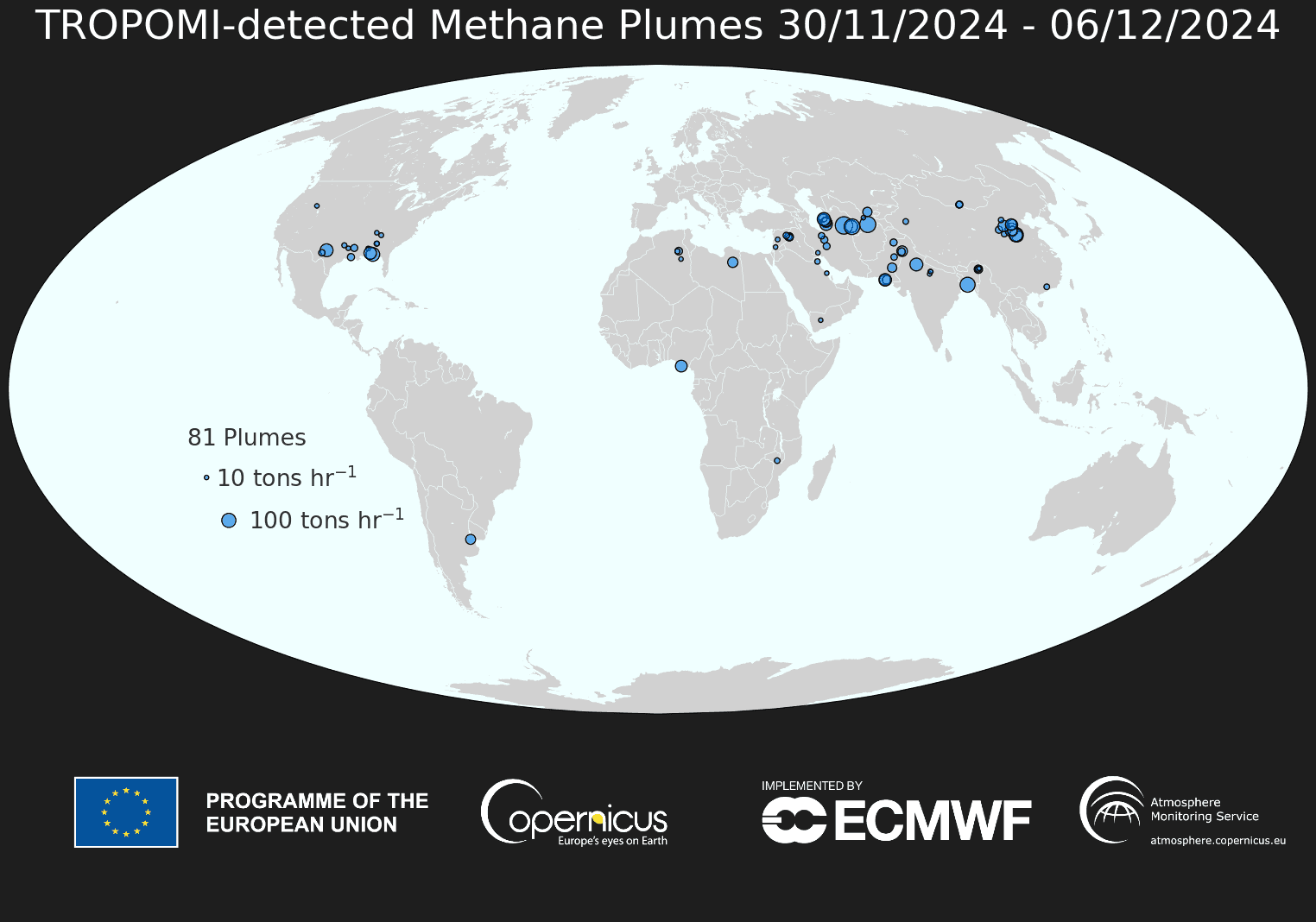

Creative Commons Attribution 4.0 International License. Detections were generated using Copernicus Atmosphere Monitoring Service information [2024], using the Schuit et al. (2023) model and validated by the SRON team; Copernicus (modified) Sentinel-5p data.

So, I ran my best model on a week of unlabelled satellite data. One week can have as many as 16,000 unlabelled images (4 times as many as in the labelled dataset). We couldn’t label all that, but we could plot the detections on a map and use SRON’s domain knowledge to gauge how accurate the predictions could be. And that produced some surprising results.

While my models were doing well on the labelled data, I had problems with the use case:

Furthermore, my model misclassified empty images as plumes much more often than it did on the labelled data.
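In metric terms, misclassifying empty images as plumes is the false positive rate: FP / (FP + TN), the share of images without a plume that the model flags anyway. Here is a minimal sketch for binary labels, assuming `1` means plume and `0` means empty. It only works where you have labels, such as the test set or a hand-checked sample of detections.

```python
import numpy as np


def false_positive_rate(y_true, y_pred) -> float:
    """Share of empty images (label 0) that the model predicts as plumes (label 1).

    Equivalent to FP / (FP + TN). Returns NaN if there are no empty images.
    """
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    empty = y_true == 0  # mask of images without a plume
    if not empty.any():
        return float("nan")
    return float(np.mean(y_pred[empty] == 1))


# Toy usage: three empty images, one of which is flagged as a plume
print(false_positive_rate([0, 0, 0, 1], [1, 0, 0, 1]))
```

Because empty scenes vastly outnumber plumes in real-world data, even a small false positive rate can turn into many false detections on a full week of data.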

My results on the use case showed a discrepancy between the test set results and what we saw in real-world data. And just as finding plumes is finding a needle in a haystack, finding the reason for this problem was tricky. I felt I was missing some key insight about the data I hadn’t considered in the processing pipeline.

I tried to solve the problem by reformulating my problem statement. I made a new list of the properties of the problem and the dataset, such as class imbalance. Then, I matched each property with an ML strategy to address it.

First, I tried a lot on the modelling side, for instance:

But it was tough to find solutions when the test accuracy didn’t really reflect what we saw in real-world data. I worked around this by regularly running inference on real-world data to keep a finger on the pulse.

Then I started thinking the problem may be the data. I thought about properties of the dataset, like:

The more I started to understand the data, the more I could fiddle with the data part of the pipeline. So I started looping back: changing parts of the preprocessing, then measuring performance again. I also tried resampling the dataset to make it more balanced. In the end, none of these solutions made a big difference. The problems with my model persisted.
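For readers who haven’t resampled a dataset before, one common scheme is random oversampling: duplicate samples from the smaller classes until every class is as large as the biggest one. The post doesn’t specify which scheme I used, so treat this as a generic sketch of the technique rather than my pipeline. It assumes `x` and `y` are NumPy arrays whose first axis indexes the samples.

```python
import numpy as np


def oversample_minority(x: np.ndarray, y: np.ndarray, seed: int = 0) -> tuple[np.ndarray, np.ndarray]:
    """Random oversampling: duplicate samples of the smaller classes until
    every class has as many samples as the largest class."""
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(y, return_counts=True)
    target = counts.max()
    indices = []
    for cls, count in zip(classes, counts):
        cls_idx = np.flatnonzero(y == cls)
        # Keep every original sample, then draw extra ones with replacement
        extra = rng.choice(cls_idx, size=target - count, replace=True)
        indices.append(np.concatenate([cls_idx, extra]))
    # Shuffle so the duplicated samples are not grouped at the end
    indices = rng.permutation(np.concatenate(indices))
    return x[indices], y[indices]


# Toy usage: 8 "empty" samples (0) and 2 "plume" samples (1)
x = np.arange(10).reshape(10, 1)
y = np.array([0] * 8 + [1] * 2)
x_bal, y_bal = oversample_minority(x, y)
print(np.unique(y_bal, return_counts=True))
```

Oversampling only repeats plumes the model has already seen; it doesn’t add new kinds of plumes or false-positive patterns. That may be one reason why rebalancing alone isn’t enough when the real-world data differs from the labelled set.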

The answer came when I realised I couldn’t stay in abstract ML land forever. I had been really focused on the ML model development. I wanted to isolate a problem and make a toy dataset like MNIST. Instead, I learned that real-life data like the TROPOMI scenes are inherently biased, noisy and unbalanced, and we couldn’t change anything about that. These challenges also meant that designing benchmark datasets for this problem is not trivial. So I had a choice: either stay in denial about how specific this dataset was, or lean in.

Eventually, I found a compromise. From the start, I really wanted an end-to-end model. But what made my pipeline work well was a separate step that filtered the data according to the primary modality. That step came from the original pipeline, and I had tried to remove it to make my model end-to-end.

I re-generated results, reran the analyses and finalised the paper draft. We submitted the paper after more than 2 years of work. As of the time of writing, I’m waiting for the reviews.

It’s important to understand that this project was much more complicated than anything I had done before. As a result, I needed to learn much more before I could worry about designing an ML4EO pipeline. Two conditions of this project really fast-tracked my learning:

First, a big difference from the previous projects is that there was already a pipeline. All I had to do was improve or extend it. But that also made it difficult. Instead of solving problems one by one, I could now start tweaking any part of the pipeline. And instead of learning about one pipeline design step at a time, I had to understand all of it before I could make my own contributions. Because of this, I learned a lot of things very fast.

Second, I could iterate much faster with help from my SRON group members. They helped me with many data-related problems:

Without these two factors, it would have taken me much longer to learn as much about the data and try so many different things.

To summarise, these were the high-level steps I took:

Compared to the previous projects, technological aspects like libraries or data processing were barely a problem anymore. I could also find ML techniques to address any problem I came up with. The implementation wasn’t that hard; it just took time. But what caused me to backtrack again and again was that I was learning how to formulate a problem. A big challenge of ML4EO is that the datasets you’re working with often don’t have a single challenge like class imbalance, but many at the same time. You need to:

This was the final blog sharing my experiences in ML4EO projects. In the next blog, I’ll give you tips on how to develop your ML4EO skills.