June 2, 2023

I spoke to Solomiia Kurchaba, a PhD student at the Leiden Institute of Advanced Computer Science (LIACS), Leiden University. We met when I was still a master’s student, and I’m grateful to have Solomiia as a colleague. Especially when I had just started, it was very helpful to learn about the experiences of someone who had already been working on ML solutions for Earth observation (EO) for several years.

Can you introduce yourself?

I’m working on data-driven methods for estimating NO2 emissions from ships to monitor compliance with International Maritime Organization (IMO) regulations on emissions. My background is not in computer science but in physics.

Can you tell me about your research?

The idea is to estimate how much NO2 individual sea-going ships emit, using TROPOMI data. I address this problem step by step in my work. The first step is associating data signals with individual ships [1]. The next step is semantic segmentation of the ship plumes [2]. My latest work is on detecting anomalously emitting ships: this information can be used to flag ships for manual onboard inspection of compliance with the IMO regulations [3].

How do you use ML in your work?

In different ways. For instance, in one of my papers [2], I use ML to segment ship plumes. In this case, the task of ML is to automatically do work that is too difficult to do manually and to make it possible to scale up the process.

To monitor compliance with international regulations, we need to observe ships over a certain period and process data globally. Because there are so many ships, it quickly becomes a big data problem, and it’s challenging to segment every ship’s plume globally by hand. ML can help with this.

The story is a little different in my latest paper [3]. There, we use regression methods to supplement the semantic segmentation: we use ML to estimate the functional dependency between one variable and a set of other variables.
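To make the regression idea a bit more concrete: the title of [3] describes detecting anomalously emitting ships through deviations from predicted TROPOMI NO2 retrievals. A minimal sketch of that general pattern, under heavy assumptions, is to fit a regression model that predicts the expected NO2 signal from a set of explanatory variables and then flag ships whose observed signal is much higher than predicted. Everything here is illustrative and not the model from [3]: the data is synthetic, the three features are hypothetical stand-ins (think ship speed, ship length, wind speed), ridge regression is used as a simple stand-in regressor, and the threshold of `k = 3` residual standard deviations is arbitrary.

```python
import numpy as np


def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge regression with an unpenalised intercept."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    gram = Xc.T @ Xc + alpha * np.eye(X.shape[1])
    coef = np.linalg.solve(gram, Xc.T @ yc)
    intercept = y_mean - x_mean @ coef
    return coef, intercept


def flag_anomalies(X, y_observed, coef, intercept, k=3.0):
    """Flag samples whose observed value exceeds the prediction
    by more than k residual standard deviations (one-sided)."""
    residuals = y_observed - (X @ coef + intercept)
    return residuals > k * residuals.std(), residuals


# Synthetic data (assumption): 200 "ships", 3 hypothetical features,
# and a noisy linear relationship to the "observed NO2 signal".
rng = np.random.default_rng(0)
n_ships = 200
X = rng.normal(size=(n_ships, 3))
true_coef = np.array([2.0, 1.0, -0.5])
y = X @ true_coef + 5.0 + rng.normal(scale=0.3, size=n_ships)

# Artificially inflate the signal of three ships to play the role of anomalies.
y[[10, 50, 120]] += 4.0

coef, intercept = fit_ridge(X, y, alpha=1.0)
is_anomalous, residuals = flag_anomalies(X, y, coef, intercept, k=3.0)
print("flagged ships:", np.flatnonzero(is_anomalous))
```

The one-sided test reflects the use case described above: a ship emitting much more than expected is the interesting case for an onboard inspection, while a lower-than-expected signal is not.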

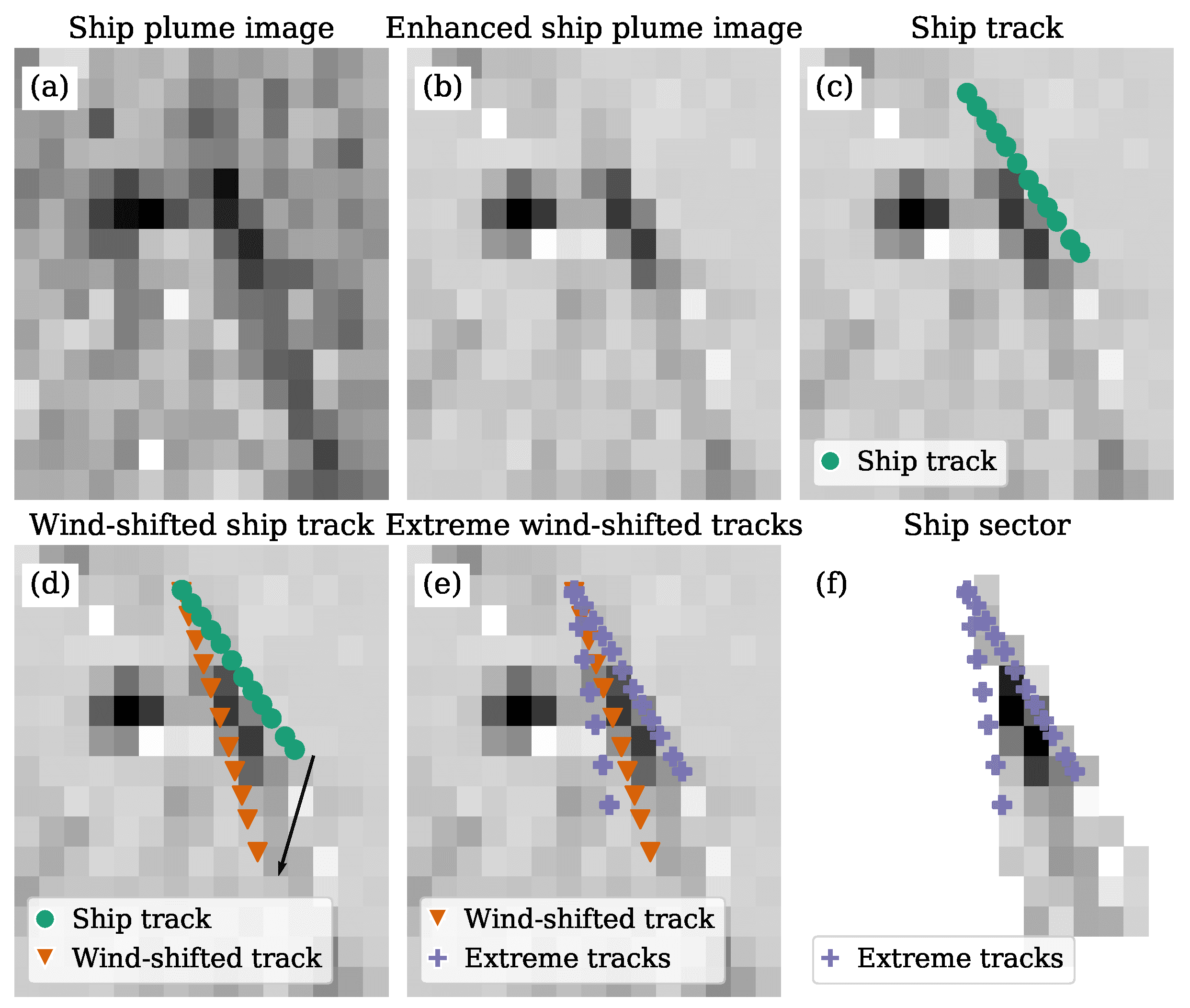

Photo Credit: Kurchaba et al. 2023 [3]

Ship sector definition pipeline. This figure shows how the borders of the ship plume are defined. Licence: CC-BY-4.0. No changes were made to the original image.

How did you learn about ML?

We had a lot of courses on statistics during my physics studies. There were also some opportunities to play with data, like preparing reports from lab experiments. I really liked all that.

Then there was one elective course on ML at my institute. A former PhD student who was working at Google at the time came and told us how cool ML is and showed us some stuff. No one at my physics institute knew anything about ML back then; even professors were attending that course. I got excited and wanted to learn more, so I decided to apply for an ML internship. I felt very lucky to be able to spend a summer with the ML group at Brussels University. I was so bad at it but learned a ton back then! When I returned to Poland, I found another internship, this time at a start-up. Later, that company offered me a full-time data science job. Eventually, I decided that I wanted to return to academia, so here I am doing my PhD :).

Where do you find information about ML?

If I need high-level information, I look for a blog post on a website like medium.com or towardsdatascience.com, that kind of blog-style site. If I want something deeper, I go for an article. But I also learn a lot from conferences; for instance, they can give me ideas I would like to try.

What is your biggest struggle with using ML?

To be honest, there are many challenges in my work, and they depend on the level we are looking at. If we’re talking about segmentation models, there is no annotated data, so I have to annotate the data manually. It’s an enormous amount of work. The limited availability of labelled data, in turn, restricts the complexity of the models you can build.

Also, there is no ground truth for the segmentation of plumes: it is unclear where a plume starts and ends. Moreover, the resolution of the data is very low [3.5 km × 5.5 km per pixel], which makes it even harder to annotate the data properly. You have no guarantee that your labels are correct.

Another challenge is that my data only contains a single channel: the one containing NO2 concentrations. There are no other supplementary sources to improve the data or to add more information.

What is your favourite thing about ML?

We use ML not because we like it but because it’s a valuable tool. It gives us solutions to problems that cannot be tackled easily. You can really get into the data and try to extract as much information as possible. That might be what is really cool about ML.

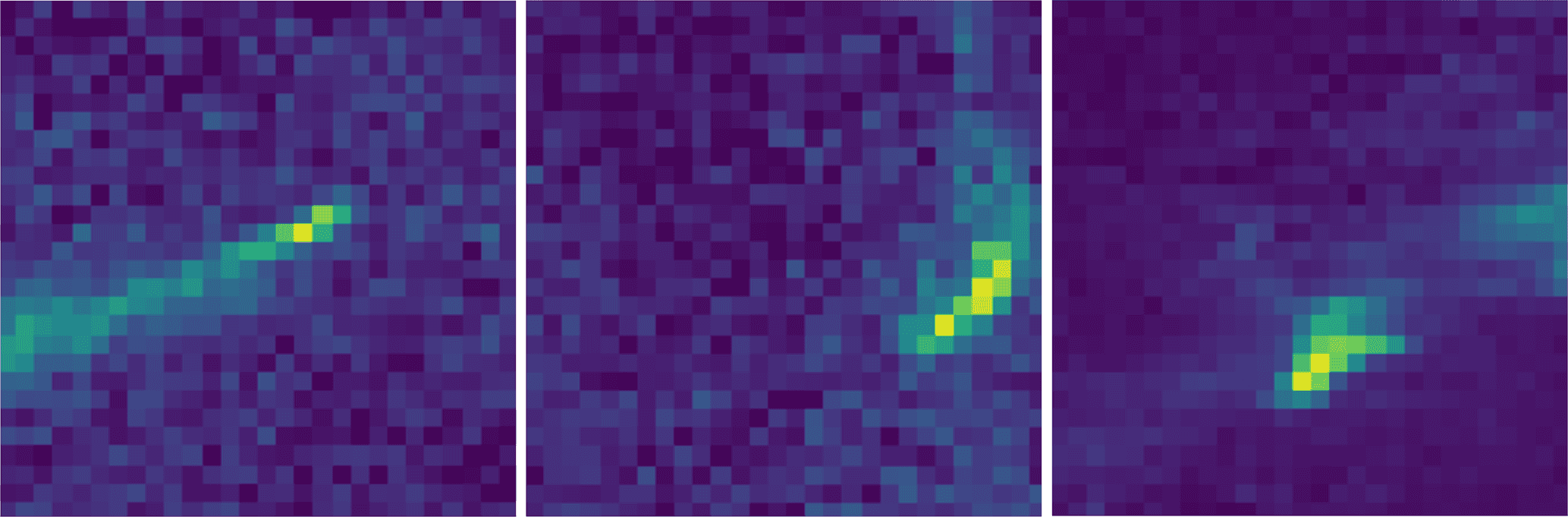

Photo Credit: Finch et al. 2022 [4]

Three examples of NO2 plumes obtained from TROPOMI. Licence: CC-BY-4.0. No changes were made to the original image.

What are you missing to use ML better in your work?

Better data! I struggle all the time to find a technique that works for this data, one that can extract any information at all, which is very difficult. I would need higher-quality data and more labels.

More models pre-trained on similar types of data would also be helpful. Right now, when I go on arXiv and look for available methods, I face the problem that they will most likely not work on my data.

For NOx, we don’t have satellites that provide higher resolutions yet. But that won’t entirely fix the problem: if you want to combine data from different satellites, they must be perfectly synchronised. Ships move all the time, and plumes, too. Also, NO2 plumes don’t live long: the gas quickly dissipates in the atmosphere. It will be hard to get useful supplementary information if the satellites are not in sync.

How do you see the future of ML in EO?

There is tons of data that must be processed, and tons of problems that are currently solved with analytical solutions. The potential for ML here is enormous. Analytical solutions are often computationally very expensive, and we can use ML to replace them with something much faster.

There is so much data coming from everywhere, all kinds of instruments.

It’s about taking it and using it. However, there are currently almost no open repositories with ready-to-use datasets, only raw data. Raw data takes a lot of effort to preprocess, which makes it hard for computer scientists unfamiliar with EO data to use it and develop methods for it. Moreover, data is not always shared alongside academic publications, even though there are a lot of conversations about this at conferences.

Remote sensing and EO data are very diverse. For instance, tons of datasets are in the visible part of the spectrum: there are competitions, for example on segmenting ships in visible-spectrum satellite imagery. But in atmospheric science, climate data is not that available yet, at least in my view.

This situation needs to improve. Once there is better data availability for ML purposes, more and more ML scientists will be interested in developing their methods on EO datasets.

Photo Credit: Kaggle

The top examples when searching Kaggle for satellite datasets: all of them in the visible spectrum.

Apart from the data, there is another issue: many EO papers use ML but contain few details about the model. The lack of detail makes it difficult to reuse the methodology. ML is usually not a focus of the review process at EO journals; reviewers concentrate on the physical soundness of the work and the conclusions regarding the application. All of this is very important, of course. But there still needs to be an incentive to focus more on the reproducibility of ML methods. I think this issue arises from bringing the two communities together.

That doesn’t mean there aren’t people motivated to improve reproducibility. For instance, I attended a climate informatics conference that hosted a reproducibility challenge. Moreover, during each panel, there was a dialogue about how reviewers need to be stricter about how the method is evaluated, whether all configuration parameters are present in the publication, and other factors related to the reproducibility of the methods. I also see new journals appearing that focus on these issues.

We can also improve on the ML side. When you talk to computer scientists, they often don’t really understand the data problem you’re facing, especially the specificity and applicability of the task. For most computer scientists, data is just the input to their ML model: the focus is on the properties of the developed model, not on what goes into it. But in reality, it can be really challenging to find a method that works for data that is so specific.

Also, we shouldn’t be shy about using simple models. Computer scientists especially like using big, fancy models, and it is considered boring when a problem requires a simpler solution. But I think it is crucial to always try simple things first when the problem is new, especially in the case of EO data, where it can be hard to find a model that works.

Why use a tank to swat a fly when you can use a piece of paper? For instance, I saw a conference talk where they said: “We tried everything, but the only thing that works is ridge regression”. And I believe them. It can be like that.
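The "simple things first" advice can be turned into a small habit: before reaching for a large model, score a trivial baseline and a simple linear model under the same cross-validation split, so that any fancier model has a clear bar to beat. The sketch below is illustrative only and is not taken from the talk mentioned above: it uses synthetic data, NumPy only, shuffled 5-fold cross-validation, and ridge regression with a fixed, arbitrarily chosen penalty `alpha=1.0`.

```python
import numpy as np


def ridge_fit_predict(X_train, y_train, X_test, alpha=1.0):
    """Fit closed-form ridge regression (unpenalised intercept), then predict X_test."""
    x_mean, y_mean = X_train.mean(axis=0), y_train.mean()
    Xc = X_train - x_mean
    gram = Xc.T @ Xc + alpha * np.eye(X_train.shape[1])
    coef = np.linalg.solve(gram, Xc.T @ (y_train - y_mean))
    return (X_test - x_mean) @ coef + y_mean


def mean_baseline(X_train, y_train, X_test):
    """The simplest possible model: always predict the training mean."""
    return np.full(len(X_test), y_train.mean())


def cv_rmse(X, y, fit_predict, k=5, seed=0):
    """Average root-mean-square error over k shuffled folds."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), k)
    scores = []
    for i, test_idx in enumerate(folds):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        y_pred = fit_predict(X[train_idx], y[train_idx], X[test_idx])
        scores.append(np.sqrt(np.mean((y[test_idx] - y_pred) ** 2)))
    return float(np.mean(scores))


# Synthetic data (assumption, not real EO data): a noisy linear relationship.
rng = np.random.default_rng(1)
X = rng.normal(size=(300, 5))
y = X @ np.array([1.5, -2.0, 0.0, 0.5, 0.0]) + rng.normal(scale=0.5, size=300)

baseline_rmse = cv_rmse(X, y, mean_baseline)
ridge_rmse = cv_rmse(X, y, ridge_fit_predict)
print(f"mean baseline RMSE: {baseline_rmse:.2f}, ridge RMSE: {ridge_rmse:.2f}")
```

Because both models are scored on the same folds (same `seed`), a bigger model can be dropped into `cv_rmse` the same way; if it cannot clearly beat the ridge score, the simpler model is probably the better choice.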

[1] Kurchaba, S., van Vliet, J., Meulman, J. J., Verbeek, F. J., & Veenman, C. J. (2021). Improving evaluation of NO2 emission from ships using spatial association on TROPOMI satellite data. In 29th International Conference on Advances in Geographic Information Systems, 454–457. https://doi.org/10.1145/3474717.3484213

[2] Kurchaba, S., van Vliet, J., Verbeek, F. J., Meulman, J. J., & Veenman, C. J. (2022). Supervised Segmentation of NO2 Plumes from Individual Ships Using TROPOMI Satellite Data. Remote Sensing, 14, 5809. https://doi.org/10.3390/rs14225809

[3] Kurchaba, S., van Vliet, J., Verbeek, F. J., & Veenman, C. J. (2023). Detection of anomalously emitting ships through deviations from predicted TROPOMI NO2 retrievals. Remote Sensing of Environment, 297, 113761. https://doi.org/10.1016/j.rse.2023.113761

[4] Finch, D. P., Palmer, P. I., & Zhang, T. (2022). Automated detection of atmospheric NO2 plumes from satellite data: a tool to help infer anthropogenic combustion emissions. Atmospheric Measurement Techniques, 15, 721–733. https://doi.org/10.5194/amt-15-721-2022