May 22, 2023

Last weekend I used ChatGPT & DALL-E for the first time. I'm not sure whether I'll use them again. Here’s why.

One of our professors asked us who wanted to help at the AI Playground organised at the local science history museum. I thought it was great that the museum reached out to the university, and I was happy to help. We helped visitors use some generative AI platforms and explained to them how these work.

It might surprise you, but it was the first time I had used ChatGPT & DALL-E. I could joke that every time I tried, ChatGPT was overloaded. In truth, I have struggled to explain why without the whole thing escalating into a rant about how AI is treated in the public debate.

In this blog, I untangle why I don’t use generative AI* through 3 main issues: what these models can and cannot do, their environmental impact, and a more semantic point, namely that there’s more to AI than generative models. I will mostly be talking through the lens of ChatGPT.


Photo courtesy of Jan van Rijn. LIACS master's and PhD students at the AI Playground, with me on the right.

Look, there are already many blog posts and papers on how ChatGPT works, so I will keep it short. Generative AI models are like very high-tech parrots [1]: they produce content (text, images, video, etc.) that we humans interpret as meaningful. Roughly speaking, there are 3 scenarios I would consider using ChatGPT or DALL-E for: writing text, creating images, and generating boilerplate code.

In all 3 cases, I don’t use generative AI. I like writing; there are enough free images on websites like Pexels and Unsplash, or I draw something myself; and I don’t encounter a lot of boilerplate code in my work.

Why don’t I list more cases? People seem to be using ChatGPT for all sorts of things. There are scenarios where it makes sense (like summarising text), and ones where it makes less sense (like searching for factual information). ChatGPT is just a language model, not an accurate source of information: any time I ask it for a bit of information, I need to check whether it is true anyway. It has been trained to produce language, not to reason or to index the internet like search engines do. So I ask myself: why not start with Google right away?

It irks me that people use generative AI just for fun. And that OpenAI is so, ehm, unopen. The environmental impact of ChatGPT is huge. Estimating the carbon footprint of AI is a whole field of science (for instance, check out the Green AutoML project), so I encourage you to look into this yourself. For some estimates, see [2] and [4], and compare them with the average energy consumption of a Dutch household [3].

I’m not saying that I’m a perfect angel regarding my carbon footprint, or even that all of us should be perfect in our individual behaviour (listen to this podcast about individual actions vs. big, systemic change to fight climate change). But my research is about combating climate change, so I try to be mindful.

There’s a lot of talk about AI lately. In theory, I should be happy about that. But it has become painfully clear to me that if a headline reads “AI”, “generative AI” is implied. Obviously, companies like OpenAI have really strong PR campaigns that (unintentionally?) usurp the entire AI debate.

As an AI researcher, that hits me in the feels. There is so much amazing work being done using AI for all kinds of applications, like flood prediction or supporting doctors in diagnosing and treating diseases like cancer [5].

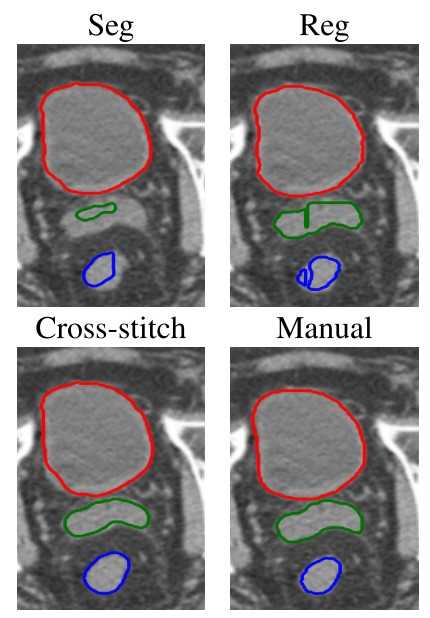

Photo Credit: Elmahdy et al. (2021)

Image depicting AI-powered registration and segmentation of radiological images of a prostate.

My grief over the attention these super relevant works do not get holds me back from using generative AI. I tend to think these works have higher societal relevance and thus deserve more attention. This stance is not productive, even petty: not using generative AI will not magically redistribute the public’s attention. But I would be lying if I said it does not play a significant role in my choices regarding generative AI.

My reasons for not using ChatGPT or DALL-E are very personal. I want to make clear that I really do understand how people can benefit from what technology like ChatGPT has to offer. However, a few things would need to change before I would use generative AI, both in the technology and in myself.

First, new technology requires you to adapt your habits. I’m very used to figuring out bash scripts myself or digging through old code to find some generic neural network training code. ChatGPT could generate this code for me, but it simply doesn’t occur to me to use it. I’m old school like that. However, with time, I think I could learn to integrate it into my workflow. Changes in the technology could make this easier. For instance, GitHub Copilot fits me like a glove because I can use it directly in my editor of choice (VS Code) without changing much about the way I work.

Second, I would like there to be more openness about how ChatGPT operates, and how user data is used. I need this for my own peace of mind, but I also want to be careful with information regarding my research. Not necessarily for myself, but out of respect for my collaborators.

Last but not least, I have to be a little less stubborn. This will not be the last time in my AI career that I feel AI is misrepresented in the public discourse. Letting that go will take some growth on my part.

What’s the takeaway? In general, I don’t think generative AI is threateningly good yet. Nevertheless, it’s absolutely necessary to talk about that scenario. I think it’s normal that people are scared; at least, history suggests so, as this happens with every big breakthrough.

I think the most important thing is to be conscious and responsible when using new technology. Be it AI or any other gadget.

[1] Emily M. Bender, Timnit Gebru, Angelina McMillan-Major, and Shmargaret Shmitchell. 2021. On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? 🦜 In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (FAccT '21). Association for Computing Machinery, New York, NY, USA, 610–623. https://doi.org/10.1145/3442188.3445922

[2] Kasper Groes Albin Ludvigsen. March 2023. ChatGPT's electricity consumption, pt. II. Medium. URL: https://kaspergroesludvigsen.medium.com/chatgpts-electricity-consumption-pt-ii-225e7e43f22b. Retrieved on: 06-06-2023.

[3] Milieu Centraal. 2023. Gemiddeld energieverbruik [Average energy consumption]. URL: https://www.milieucentraal.nl/energie-besparen/inzicht-in-je-energierekening/gemiddeld-energieverbruik/. Retrieved on: 06-06-2023.

[4] Patrick Mineault. April 2023. How much energy does ChatGPT use? xcorr. URL: https://xcorr.net/2023/04/08/how-much-energy-does-chatgpt-use/. Retrieved on: 06-06-2023.

[5] M. S. Elmahdy et al. 2021. Joint Registration and Segmentation via Multi-Task Learning for Adaptive Radiotherapy of Prostate Cancer. IEEE Access, vol. 9, pp. 95551–95568. https://doi.org/10.1109/ACCESS.2021.3091011